Content from The QuTech Software Maturity Model (QSMM)

Last updated on 2025-07-01 | Edit this page

QSMM

We frame our effort in this course in the context of the QuTech Software Maturity Model (QSMM). A software maturity model consists of structured levels that describes how well the behaviors, practices and processes of software development an organization can reliably and sustainably produce required outcomes. QSMM is a software maturity model specifically tailored for QuTech, based on TNO guidelines. QSMM consists of two sub-models:

- the software product maturity model

- the software development process maturity model

The purpose of this episode is to explain how software developed by researchers should fit into these models.

Software Product Maturity

This consists of the following levels:

| Category | Type | Purpose | Example |

|---|---|---|---|

| 1 | Prototype | Software developed for a specific analysis or to implement a concept (to generate feedback). | Small software product, a single (one-off) component created by one developer also being the primary user. The software may be reused by the developer but is not intended to be used by others and is not managed/maintained for the long term. |

| 2 | Proven | Software for engineering feasibility demonstrations (prove that a technology works) or developed as part of a research project or output of a research project. | Small software product consisting of 1 or few components that are used by a limited number of internal users (e.g., a project or research group). Long term maintenance is more important because the software is distributed wider and has a lifespan longer than the setting in which it was developed. |

| 3 | Mature | Strategic, business/research objectives driven systems internally used over a longer period. | Small projects delivering reliable software products that are used by a group of users and is often mission critical. When successful, the user base of an internal product may optionally be extended to external users. The software may also be used in hackathons. Long term maintenance is needed but organized within the user-group. |

| 4 | Commercial-like | Products (operational environment, external facing) | Large projects with complex, public facing software products developed within separate software development teams. Long term software maintenance is done, often by different software engineers than the original developers. |

Software Development Process Maturity

This consists of the following levels:

| Level | Name | Description |

|---|---|---|

| 1 | Undefined | Undefined level, no policies or procedures established for the software process. Ad hoc unpredictable software development. Poorly controlled and reactive. Success is effort of individuals (local heroes). |

| 2 | Repeatable | Repeatable level, basic project management established for software development (to track cost, functionality, and time). Development process decisions made are often reactive and based on intuition or experience instead of executing a predefined plan. The process is at least documented sufficiently such that repeating the same steps may be attempted. |

| 3 | Mature | Mature level. Software development process for management and engineering is documented. At this level, the software development process is defined clearly while at organizational level the processes are not standardized. |

| 4 | Defined | Defined level. Software development process for management and engineering are integrated in the organization. Projects tailor their processes from organization’s standards. The processes are qualitative measured and scored. |

| 5 | Managed | Managed level. The quality of the software process is quantitatively measured, so it can be evaluated and adjusted when necessary. |

| 6 | Optimizing | Optimizing level - continuous process improvement (based on data collected as described in the managed level, as well as investing in innovation). |

Goals for Research-Developed Software

- Most software developed by researchers will fall in the Category 1 and 2 in the Software Product Maturity levels. Goal is to make it possible to transition this to Category 3 and 4 if ever needed

- With respect to process maturity:

- Goal of this course is to make researches aware of development process levels, and the way they should be used

- Level 1 development should only apply to ad-hoc projects, typically developed over at most few days.

- For any other software development activities, researches should strive for at least Level 2, ideally Level 3 practices.

Group exercise

- List all libraries, software products that are used within your research group

- Categorize the components into home made and commercial

- Why do you pay for a certain commercial product?

- What level are the homemade libraries in? what level should they be in?

Content from Version Control

Last updated on 2025-07-07 | Edit this page

Overview

Questions

- What is version control and why should I use it?

- How do I get set up to use Git?

- Where does Git store information?

Objectives

- Understand the benefits of an automated version control system.

- Understand the basics of how automated version control systems work.

- Configure

gitthe first time it is used on a computer. - Understand the meaning of the

--globalconfiguration flag. - Create a local Git repository.

- Describe the purpose of the

.gitdirectory.

Motivation

Jimmy and Alfredo have been hired by Ratatouille restaurant (a special restaurant from Euphoric State University) to investigate if it is possible to make the best recipes archive ever. Before even starting, they make a plan of how they want to accomplish this task, and come up with the following requirements:

- The want to be able to work on recipes at the same time, with minimal coordination, and make sure they do not overwrite each other’s changes.

- The want to be able to look back at the history of a recipe, and see who has added what to that recipe.

- They also would like to be able, at any time, to go back to an older version of any recipe.

A colleague suggests using version control to manage

their work. Alfredo and Luigi look at what version control systems are

available, and end up choosing Git, since it is widely used

- it is pretty much the de-facto standard in this area. Throughout this

course we will follow Luigi and Alfredo on their journey learning and

using Git.

Automatic Version Control

What Is Automatic Version Control?

Automatic version control is a system that tracks changes to files over time, allowing multiple people to collaborate, revert to previous versions, and maintain a history of modifications. It is commonly used in software development to manage source code, but it can also be used for documents, configurations, and other digital assets.

Using version control has many benefits, the most important being:

Nothing that is committed to version control is ever lost, unless you work really, really hard at losing it. Since all old versions of files are saved, it’s always possible to go back in time to see exactly who wrote what on a particular day, or what version of a program was used to generate a particular set of results.

As we have this record of who made what changes when, we know who to ask if we have questions later on, and, if needed, revert to a previous version, much like the “undo” feature in an editor.

When several people collaborate in the same project, it’s possible to accidentally overlook or overwrite someone’s changes. The version control system automatically notifies users whenever there’s a conflict between one person’s work and another’s.

Teams are not the only ones to benefit from version control: lone researchers can benefit immensely. Keeping a record of what was changed, when, and why is extremely useful for all researchers if they ever need to come back to the project later on (e.g., a year later, when memory has faded).

Version control is the lab notebook of the digital world: it’s what professionals use to keep track of what they’ve done and to collaborate with other people. Every large software development project relies on it, and most programmers use it for their small jobs as well. And it isn’t just for software: books, papers, small data sets, and anything that changes over time or needs to be shared can and should be stored in a version control system.

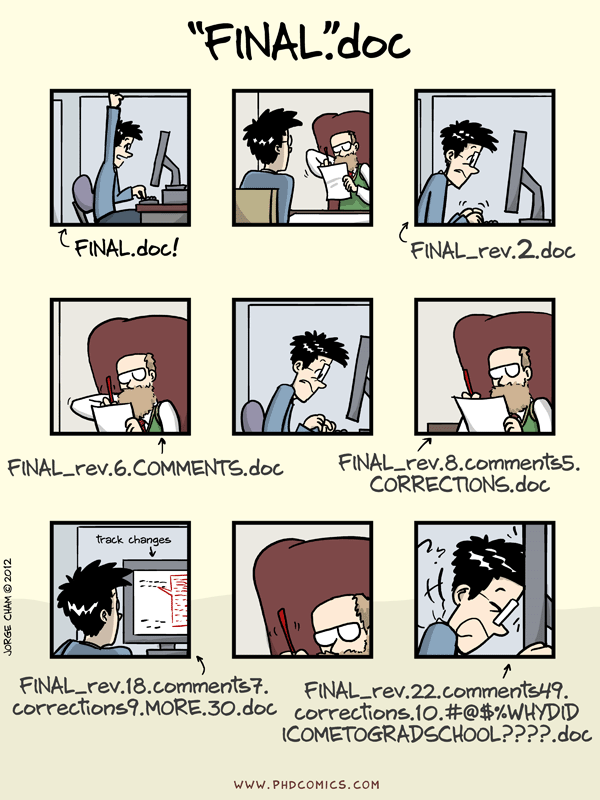

We’ll start by exploring how version control can be used to keep track of what one person did and when. Even if you aren’t collaborating with other people, automated version control is much better than this situation:

We’ve all been in this situation before: it seems unnecessary to have multiple nearly-identical versions of the same document. Some word processors let us deal with this a little better, such as Microsoft Word’s Track Changes, Google Docs’ version history, or LibreOffice’s Recording and Displaying Changes.

Version control systems start with a base version of the document and then record changes you make each step of the way. You can think of it as a recording of your progress: you can rewind to start at the base document and play back each change you made, eventually arriving at your more recent version.

Once you think of changes as separate from the document itself, you can then think about “playing back” different sets of changes on the base document, ultimately resulting in different versions of that document. For example, two users can make independent sets of changes on the same document.

Unless multiple users make changes to the same section of the document - a conflict - you can incorporate two sets of changes into the same base document.

It is the version control system that keeps track of these changes for us, by effectively creating different versions of our files. It allows us to decide which changes will be made to the next version (each record of these changes is called a commit), and keeps useful metadata about them. The complete history of commits for a particular project and their metadata make up a repository. Repositories can be kept in sync across different computers, facilitating collaboration among different people.

The Long History of Version Control Systems

Automated version control systems are nothing new. Tools like RCS, CVS, or Subversion have been around since the early 1980s and are used by many large companies. However, many of these are now considered legacy systems (i.e., outdated) due to various limitations in their capabilities. More modern systems, such as Git and Mercurial, are distributed, meaning that they do not need a centralized server to host the repository. These modern systems also include powerful merging tools that make it possible for multiple authors to work on the same files concurrently.

Paper Writing

Imagine you drafted an excellent paragraph for a paper you are writing, but later ruin it. How would you retrieve the excellent version of your conclusion? Is it even possible?

Imagine you have 5 co-authors. How would you manage the changes and comments they make to your paper? If you use LibreOffice Writer or Microsoft Word, what happens if you accept changes made using the

Track Changesoption? Do you have a history of those changes?

Recovering the excellent version is only possible if you created a copy of the old version of the paper. The danger of losing good versions often leads to the problematic workflow illustrated in the PhD Comics cartoon at the top of this page.

Collaborative writing with traditional word processors is cumbersome. Either every collaborator has to work on a document sequentially (slowing down the process of writing), or you have to send out a version to all collaborators and manually merge their comments into your document. The ‘track changes’ or ‘record changes’ option can highlight changes for you and simplifies merging, but as soon as you accept changes you will lose their history. You will then no longer know who suggested that change, why it was suggested, or when it was merged into the rest of the document. Even online word processors like Google Docs or Microsoft Office Online do not fully resolve these problems.

Key Points

- Version control is like an unlimited ‘undo’.

- Version control also allows many people to work in parallel.

Introducing Git

In this section we will move away from the general discussion on version control systems, and focus on one modern such system - namely Git - which over the past ten years has emerged as the de-facto standard in this area. One of the main goals of this course is to make you very comfortable with using git, which we believe will greatly help you towards the goal of making robust and reproducible research software. If you want to know more about how git has emerged as the dominant version control system, there are a few interesting articles on its history here, here, and here

Setting Up Git

Prerequisites

- In this episode we use Git from the Unix Shell. Some previous experience with the shell is expected, but isn’t mandatory.

- It is also assumed that you have already installed Git on your system. If this is not the case, please do this now, by following the download/installation instructions here

When we use Git on a new computer for the first time, we need to configure a few things. Below are a few examples of configurations we will set as we get started with Git:

- our name and email address,

- what our preferred text editor is,

- and that we want to use these settings globally (i.e. for every project).

On a command line, Git commands are written as

git verb options, where verb is what we

actually want to do and options is additional optional

information which may be needed for the verb. So here is

how Alfredo sets up git on his new laptop:

BASH

$ git config --global user.name "Alfredo Linguini"

$ git config --global user.email "a.linguini@ratatouille.fr"Please use your own name and email address instead of Alfredo’s. This user name and email will be associated with your subsequent Git activity, which means that any changes pushed to Git platforms, such as GitHub, BitBucket, GitLab or any other Git host server after this lesson will include this information.

For this lesson, we will be interacting with TU Delft GitLab instance and so the email address used should be your TUD email.

Line Endings

As with other keys, when you press Enter or ↵ or on Macs, Return on your keyboard, your computer encodes this input as a character. Different operating systems use different character(s) to represent the end of a line. (You may also hear these referred to as newlines or line breaks.) Because Git uses these characters to compare files, it may cause unexpected issues when editing a file on different machines. Though it is beyond the scope of this lesson, you can read more about this issue in the Pro Git book.

You can change the way Git recognizes and encodes line endings using

the core.autocrlf command to git config. The

following settings are recommended:

On macOS and Linux:

And on Windows:

Alfredo also has to set his favorite text editor, following this table:

| Editor | Configuration command |

|---|---|

| Atom | $ git config --global core.editor "atom --wait" |

| nano | $ git config --global core.editor "nano -w" |

| BBEdit (Mac, with command line tools) | $ git config --global core.editor "bbedit -w" |

| Sublime Text (Mac) | $ git config --global core.editor "/Applications/Sublime\ Text.app/Contents/SharedSupport/bin/subl -n -w" |

| Sublime Text (Win, 32-bit install) | $ git config --global core.editor "'c:/program files (x86)/sublime text 3/sublime_text.exe' -w" |

| Sublime Text (Win, 64-bit install) | $ git config --global core.editor "'c:/program files/sublime text 3/sublime_text.exe' -w" |

| Notepad (Win) | $ git config --global core.editor "c:/Windows/System32/notepad.exe" |

| Notepad++ (Win, 32-bit install) | $ git config --global core.editor "'c:/program files (x86)/Notepad++/notepad++.exe' -multiInst -notabbar -nosession -noPlugin" |

| Notepad++ (Win, 64-bit install) | $ git config --global core.editor "'c:/program files/Notepad++/notepad++.exe' -multiInst -notabbar -nosession -noPlugin" |

| Kate (Linux) | $ git config --global core.editor "kate" |

| Gedit (Linux) | $ git config --global core.editor "gedit --wait --new-window" |

| Scratch (Linux) | $ git config --global core.editor "scratch-text-editor" |

| Emacs | $ git config --global core.editor "emacs" |

| Vim | $ git config --global core.editor "vim" |

| VS Code | $ git config --global core.editor "code --wait" |

It is possible to reconfigure the text editor for Git whenever you

want to change it. To keep the presentation uniform, we will use

nano as our default editor for this workshop.

Git (2.28+) allows configuration of the name of the branch created

when you initialize any new repository. Alfredo decides to use that

feature to set it to main so it matches the cloud service

he will eventually use.

Default Git branch naming

Source file changes are associated with a “branch.” For new learners

in this lesson, it’s enough to know that branches exist, and this lesson

uses one branch. By default, Git will create a branch called

master when you create a new repository with

git init (as explained in the next section). This term

evokes the racist practice of human slavery and the software development

community has moved to adopt more inclusive language.

In 2020, most Git code hosting services transitioned to using

main as the default branch. As an example, any new

repository that is opened in GitHub and GitLab default to

main. However, Git has not yet made the same change. As a

result, local repositories must be manually configured have the same

main branch name as most cloud services.

For versions of Git prior to 2.28, the change can be made on an

individual repository level. The command for this is in the next

episode. Note that if this value is unset in your local Git

configuration, the init.defaultBranch value defaults to

master.

The five commands we just ran above only need to be run once: the

flag --global tells Git to use the settings for every

project, in your user account, on this computer.

Let’s review those settings with the list command:

If necessary, you change your configuration using the same commands to choose another editor or update your email address. This can be done as many times as you want.

Proxy

In some networks you need to use a proxy. If this is the case, you may also need to tell Git about the proxy:

To disable the proxy, use

Git Help and Manual

Always remember that if you forget the subcommands or options of a

git command, you can access the relevant list of options

typing git <command> -h or access the corresponding

Git manual by typing git <command> --help, e.g.:

While viewing the manual, remember the : is a prompt

waiting for commands and you can press Q to exit the

manual.

More generally, you can get the list of available git

commands and further resources of the Git manual typing:

Key Points

- Use

git configwith the--globaloption to configure a user name, email address, editor, and other preferences once per machine.

Creating a Git Repository

Once Git is configured, we can start using it.

We will help Alfredo with his new project, create a repository with all his recipes.

First, let’s create a new directory in the Desktop

folder for our work and then change the current working directory to the

newly created one:

Then we tell Git to make recipes a repository -- a place where Git can

store versions of our files:

It is important to note that git init will create a

repository that can include subdirectories and their files—there is no

need to create separate repositories nested within the

recipes repository, whether subdirectories are present from

the beginning or added later. Also, note that the creation of the

recipes directory and its initialization as a repository

are completely separate processes.

It is also important to understand that the Git repositories that you

will create and use in this episode and the next one are all

local - meaning they only exists on your computer, and can

only be used by you. In the next workshop we will show you how to

transition from a local repository to an online one - which will enable

you to collaborate with multiple colleagues working on the same

project.

If we use ls to show the directory’s contents, it

appears that nothing has changed:

But if we add the -a flag to show everything, we can see

that Git has created a hidden directory within recipes

called .git:

OUTPUT

. .. .gitGit uses this special subdirectory to store all the information about

the project, including the tracked files and sub-directories located

within the project’s directory. If we ever delete the .git

subdirectory, we will lose the project’s version control history.

We can now start using one of the most important git commands, which

is particularly helpful to beginners. git status tells us

the status of our project, and better, a list of changes in the project

and options on what to do with those changes. We can use it as often as

we want, whenever we want to understand what is going on.

OUTPUT

On branch main

No commits yet

nothing to commit (create/copy files and use "git add" to track)If you are using a different version of git, the exact

wording of the output might be slightly different.

Places to Create Git Repositories

Along with tracking information about recipes (the project we have

already created), Alfredo would also like to track information about

desserts specifically. Alfredo creates a desserts project

inside his recipes project with the following sequence of

commands:

BASH

$ cd ~/Desktop # return to Desktop directory

$ cd recipes # go into recipes directory, which is already a Git repository

$ ls -a # ensure the .git subdirectory is still present in the recipes directory

$ mkdir desserts # make a sub-directory recipes/desserts

$ cd desserts # go into desserts subdirectory

$ git init # make the desserts subdirectory a Git repository

$ ls -a # ensure the .git subdirectory is present indicating we have created a new Git repositoryIs the git init command, run inside the

desserts subdirectory, required for tracking files stored

in the desserts subdirectory?

No. Alfredo does not need to make the desserts

subdirectory a Git repository because the recipes

repository will track all files, sub-directories, and subdirectory files

under the recipes directory. Thus, in order to track all

information about desserts, Alfredo only needed to add the

desserts subdirectory to the recipes

directory.

Additionally, Git repositories can interfere with each other if they

are “nested”: the outer repository will try to version-control the inner

repository. Therefore, it’s best to create each new Git repository in a

separate directory. To be sure that there is no conflicting repository

in the directory, check the output of git status. If it

looks like the following, you are good to go to create a new repository

as shown above:

OUTPUT

fatal: Not a git repository (or any of the parent directories): .gitCorrecting git init Mistakes

Jimmy explains to Alfredo how a nested repository is redundant and

may cause confusion down the road. Alfredo would like to go back to a

single git repository. How can Alfredo undo his last

git init in the desserts subdirectory?

Background

Removing files from a Git repository needs to be done with caution. But we have not learned yet how to tell Git to track a particular file; we will learn this in the next episode. Files that are not tracked by Git can easily be removed like any other “ordinary” files with

Similarly a directory can be removed using

rm -r dirname. If the files or folder being removed in this

fashion are tracked by Git, then their removal becomes another change

that we will need to track, as we will see in the next episode.

Solution

Git keeps all of its files in the .git directory. To

recover from this little mistake, Alfredo can remove the

.git folder in the desserts subdirectory by running the

following command from inside the recipes directory:

But be careful! Running this command in the wrong directory will

remove the entire Git history of a project you might want to keep. In

general, deleting files and directories using rm from the

command line cannot be reversed. Therefore, always check your current

directory using the command pwd.

Key Points

-

git initinitializes a repository. - Git stores all of its repository data in the

.gitdirectory.

Command Line or Graphical Tools?

It is possible to use Git from either the command line

(e.g. GitBash) or through a variety of visual tools, such

as Sourcetree,

TortoiseGit,

SmartGit.

Furthermore, most modern integrated development environments such as

PyCharm and

VSCode

provide integrated visual Git tools.

Knowing how to use Git from the command line has definite benefits:

- Helps novice users become more comfortable with Git’s underlying structure and commands

- Gives more precise control at the low level (through command-line options)

- Equips users with the skills to troubleshoot and solve problems that may not be easily addressed with a GUI

- When searching for online resources on specific Git workflows, most information is available as command line instructions; same applies for help/instructions provided by AI Assistants such as ChatGPT, Gemini, or Claude

- GUI tools may change their menus/“look and feel” with new releases, while Git commands/options are much more stable

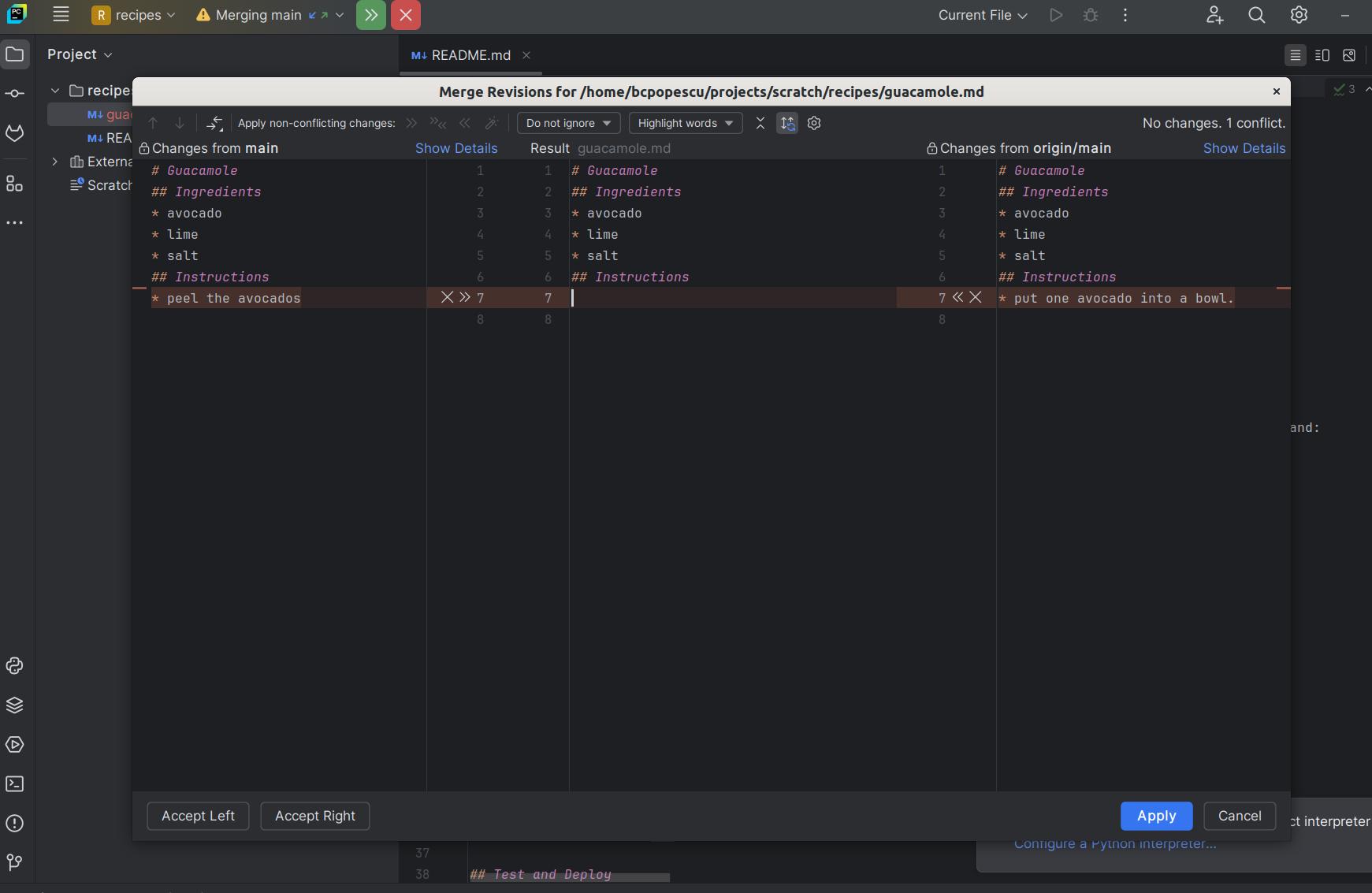

On the other hands, it is important to understand that visual Git tools can greatly improve the overall developer experience as well as increase productivity:

- Graphical Git tools offer an intuitive interface, making it easier for beginners to grasp basic concepts like branching, or viewing the repository’s history

- Certain advanced Git workflows, such as merging and solving conflicts become much more efficient and less error-prone when performed in a visual environment.

Given all these considerations, one of the goals of this course is to make participants comfortable with using Git from the command line, in order to provide them with a solid foundation upon which they can further expand their Git knowledge. At the same time, we are encouraging participants to explore Git visual tools. For the more advanced Git workflows (e.g. reviewing changes, merging, conflict resolution) we also explain how these workflows can be performed using the Git visual tools provided by the PyCharm development environment.

Challenge

Using PyCharm, open the folder containing the Git repository you created earlier in this episode. Locate the Git visual controls. Are they intuitive? Do you already recognize any workflows you could perform from Pycharm? We will cover some of these visual workflows in the following episodes.

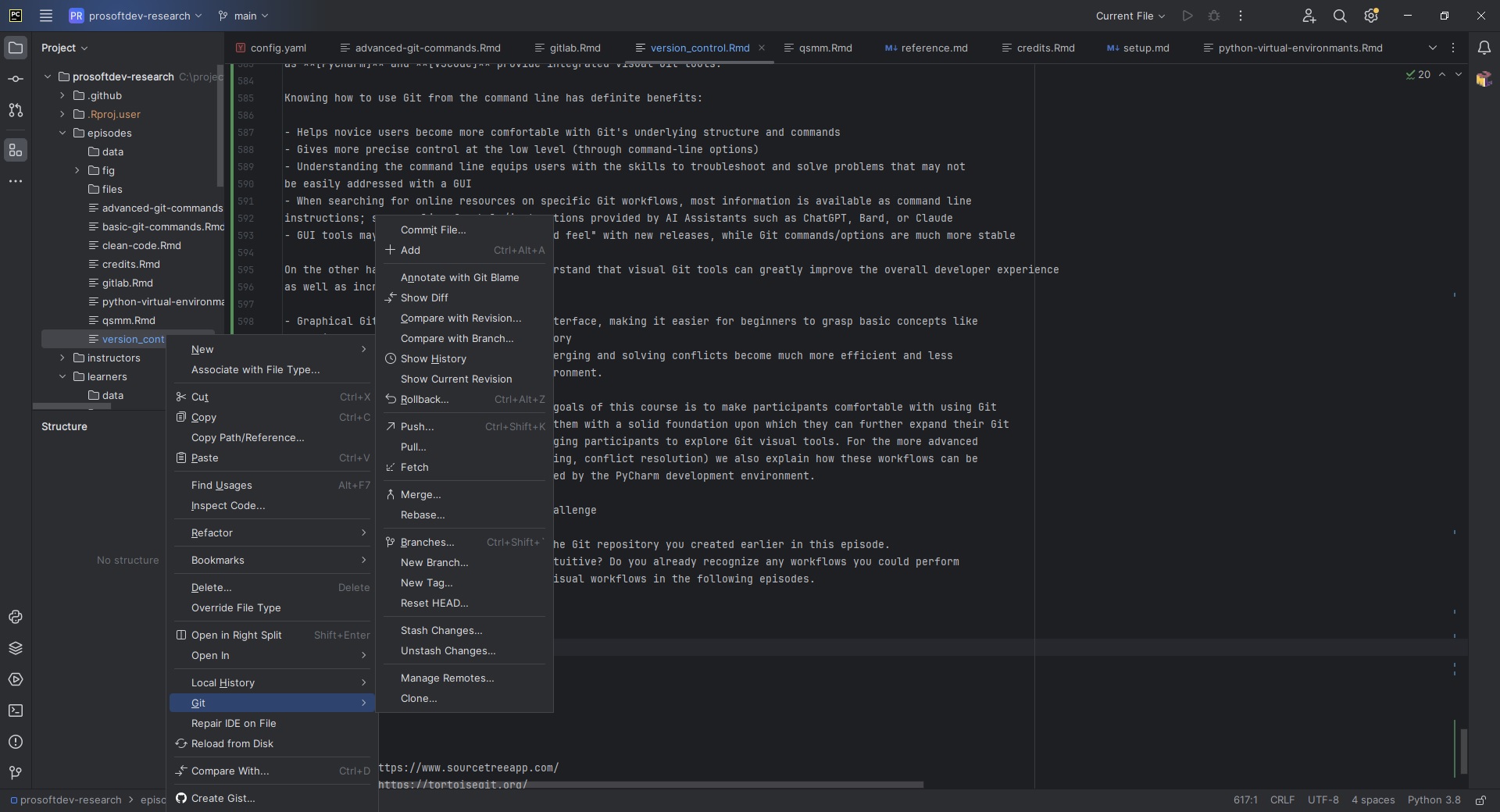

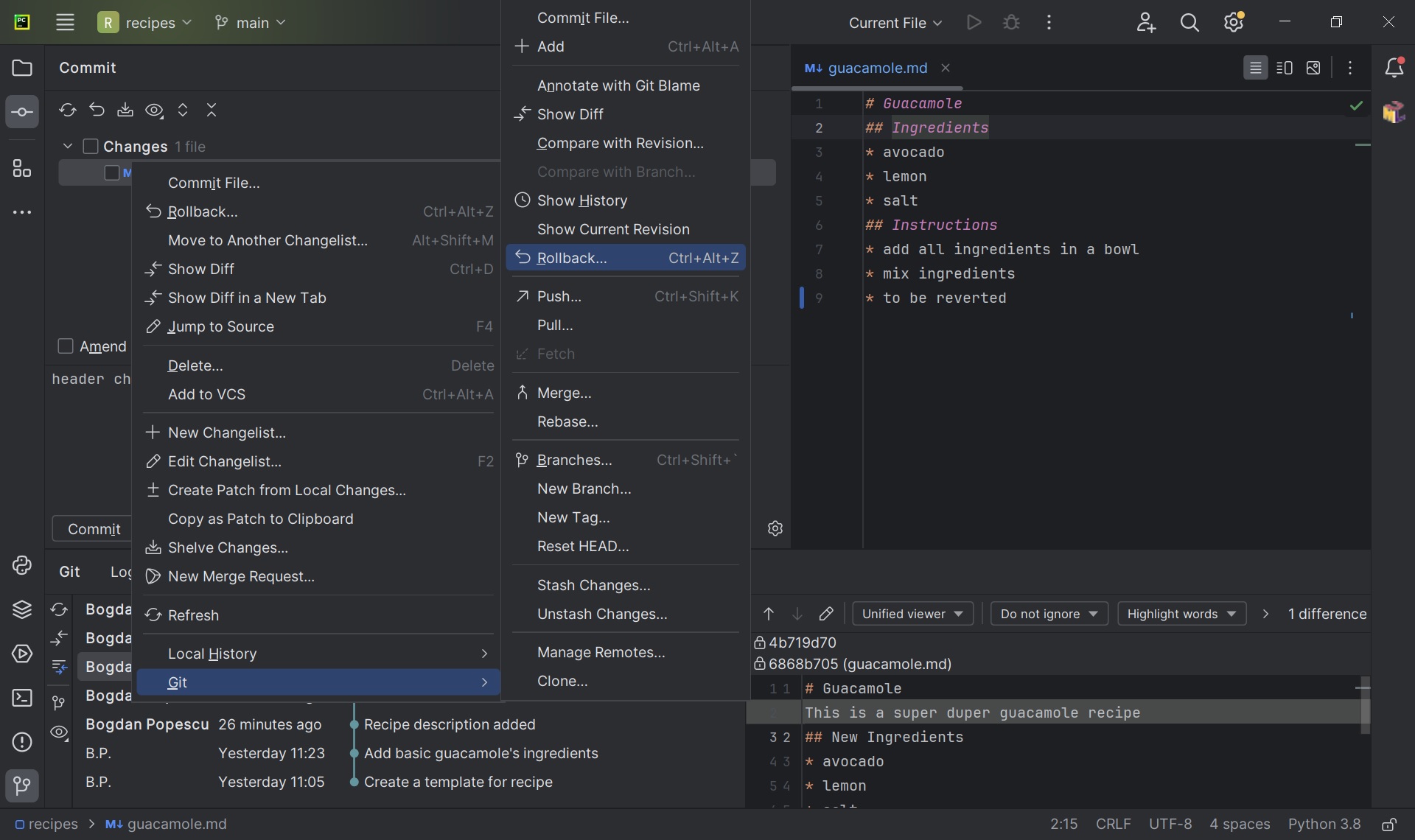

Accessing the Git visual tools in PyCharm is done by right-clicking

on a file/folder in the left navigation panel, then selecting

Git from the pop-up menu.

Key Points

- Use git from the command line for maximum control over workflows.

- Using visual tools for some of the advanced Git workflows will increase productivity and reduce errors.

Content from Basic Git Commands

Last updated on 2025-07-07 | Edit this page

Overview

Questions

- How do I record changes in Git?

- How do I record notes about what changes I made and why?

- How can I identify old versions of files?

- How do I review my changes?

- How can I recover old versions of files?

- How can I tell Git to ignore files I don’t want to track?

Objectives

- Go through the modify-add-commit cycle for one or more files.

- Explain where information is stored at each stage of that cycle.

- Distinguish between descriptive and non-descriptive commit messages.

- Explain what the HEAD of a repository is and how to use it.

- Compare various versions of tracked files.

- Restore old versions of files.

- Configure Git to ignore specific files.

The Modify-Add-Commit Cycle

In this episode we will continue working with the

recipes repository you have created in the previous

episode. First let’s make sure we’re still in the right directory. You

should be in the recipes directory.

Let’s create a file called guacamole.md that contains

the basic structure to have a recipe. We will use nano to

edit the file, but feel free to use another text editor if you prefer.

In particular, this does not have to be the core.editor you

set globally earlier. But remember, the steps to create create or edit a

new file will depend on the editor you choose (it might not be

nano).

Type the text below into the guacamole.md file:

OUTPUT

# Guacamole

## Ingredients

## InstructionsSave the file and exit your editor. Next, let’s verify that the file

was properly created by running the list command (ls):

OUTPUT

guacamole.mdguacamole.md contains three lines, which we can see by

running:

OUTPUT

# Guacamole

## Ingredients

## InstructionsIf we check the status of our project again, Git tells us that it’s noticed the new file:

OUTPUT

On branch main

No commits yet

Untracked files:

(use "git add <file>..." to include in what will be committed)

guacamole.md

nothing added to commit but untracked files present (use "git add" to track)The “untracked files” message means that there’s a file in the

directory that Git isn’t keeping track of. We can tell Git to track a

file using git add:

and then check that the right thing happened:

OUTPUT

On branch main

No commits yet

Changes to be committed:

(use "git rm --cached <file>..." to unstage)

new file: guacamole.md

Git now knows that it’s supposed to keep track of

guacamole.md, but it hasn’t recorded these changes as a

commit yet. To get it to do that, we need to run one more command:

OUTPUT

[main (root-commit) f22b25e] Create a template for recipe

1 file changed, 1 insertion(+)

create mode 100644 guacamole.mdWhen we run git commit, Git takes everything we have

told it to save by using git add and stores a copy

permanently inside the special .git directory. This

permanent copy is called a commit

(or revision) and its short

identifier is f22b25e. Your commit may have another

identifier.

We use the -m flag (for “message”) to record a short,

descriptive, and specific comment that will help us remember later on

what we did and why. If we just run git commit without the

-m option, Git will launch nano (or whatever

other editor we configured as core.editor) so that we can

write a longer message.

Good commit

messages start with a brief (<50 characters) statement about the

changes made in the commit. Generally, the message should complete the

sentence “If applied, this commit will”

If we run git status now:

OUTPUT

On branch main

nothing to commit, working tree cleanit tells us everything is up to date. If we want to know what we’ve

done recently, we can ask Git to show us the project’s history using

git log:

OUTPUT

commit f22b25e3233b4645dabd0d81e651fe074bd8e73b

Author: Alfredo Linguini <a.linguini@ratatouille.fr>

Date: Thu Aug 22 09:51:46 2023 -0400

Create a template for recipegit log lists all commits made to a repository in

reverse chronological order. The listing for each commit includes the

commit’s full identifier (which starts with the same characters as the

short identifier printed by the git commit command

earlier), the commit’s author, when it was created, and the log message

Git was given when the commit was created.

Where Are My Changes?

If we run ls at this point, we will still see just one

file called guacamole.md. That’s because Git saves

information about files’ history in the special .git

directory mentioned earlier so that our filesystem doesn’t become

cluttered (and so that we can’t accidentally edit or delete an old

version).

Modifying tracked files

Now suppose Alfredo adds more information to the file. (Again, we’ll

edit with nano and then cat the file to show

its contents; you may use a different editor, and don’t need to

cat.)

OUTPUT

# Guacamole

## Ingredients

* avocado

* lemon

* salt

## InstructionsWhen we run git status now, it tells us that a file it

already knows about has been modified:

OUTPUT

On branch main

Changes not staged for commit:

(use "git add <file>..." to update what will be committed)

(use "git restore <file>..." to discard changes in working directory)

modified: guacamole.md

no changes added to commit (use "git add" and/or "git commit -a")The last line is the key phrase: “no changes added to commit”. We

have changed this file, but we haven’t told Git we will want to save

those changes (which we do with git add) nor have we saved

them (which we do with git commit). So let’s do that now.

It is good practice to always review our changes before saving them. We

do this using git diff. This shows us the differences

between the current state of the file and the most recently saved

version:

OUTPUT

diff --git a/guacamole.md b/guacamole.md

index df0654a..315bf3a 100644

--- a/guacamole.md

+++ b/guacamole.md

@@ -1,3 +1,6 @@

# Guacamole

## Ingredients

+* avocado

+* lemon

+* salt

## InstructionsThe output is cryptic because it is actually a series of commands for

tools like editors and patch telling them how to

reconstruct one file given the other. If we break it down into

pieces:

- The first line tells us that Git is producing output similar to the

Unix

diffcommand comparing the old and new versions of the file. - The second line tells exactly which versions of the file Git is

comparing;

df0654aand315bf3aare unique computer-generated labels for those versions. - The third and fourth lines once again show the name of the file being changed.

- The remaining lines are the most interesting, they show us the

actual differences and the lines on which they occur. In particular, the

+marker in the first column shows where we added a line.

After reviewing our change, it’s time to commit it:

OUTPUT

On branch main

Changes not staged for commit:

(use "git add <file>..." to update what will be committed)

(use "git restore <file>..." to discard changes in working directory)

modified: guacamole.md

no changes added to commit (use "git add" and/or "git commit -a")Whoops: Git won’t commit because we didn’t use git add

first. Let’s fix that:

OUTPUT

[main 34961b1] Add basic guacamole's ingredient

1 file changed, 3 insertions(+)Git insists that we add files to the set we want to commit before actually committing anything. This allows us to commit our changes in stages and capture changes in logical portions rather than only large batches. For example, suppose we’re adding a few citations to relevant research to our thesis. We might want to commit those additions, and the corresponding bibliography entries, but not commit some of our work drafting the conclusion (which we haven’t finished yet).

To allow for this, Git has a special staging area where it keeps track of things that have been added to the current changeset but not yet committed.

Staging Area

If you think of Git as taking snapshots of changes over the life of a

project, git add specifies what will go in a

snapshot (putting things in the staging area), and

git commit then actually takes the snapshot, and

makes a permanent record of it (as a commit). If you don’t have anything

staged when you type git commit, Git will prompt you to use

git commit -a or git commit --all, which is

kind of like gathering everyone to take a group photo! However,

it’s almost always better to explicitly add things to the staging area,

because you might commit changes you forgot you made. (Going back to the

group photo simile, you might get an extra with incomplete makeup

walking on the stage for the picture because you used -a!)

Try to stage things manually, or you might find yourself searching for

“git undo commit” more than you would like!

Let’s watch as our changes to a file move from our editor to the staging area and into long-term storage. First, we’ll improve our recipe by changing ‘lemon’ to ‘lime’:

OUTPUT

# Guacamole

## Ingredients

* avocado

* lime

* salt

## InstructionsOUTPUT

diff --git a/guacamole.md b/guacamole.md

index 315bf3a..b36abfd 100644

--- a/guacamole.md

+++ b/guacamole.md

@@ -1,6 +1,6 @@

# Guacamole

## Ingredients

* avocado

-* lemon

+* lime

* salt

## InstructionsSo far, so good: we’ve replaced one line (shown with a -

in the first column) with a new line (shown with a + in the

first column). Now let’s put that change in the staging area and see

what git diff reports:

There is no output: as far as Git can tell, there’s no difference between what it’s been asked to save permanently and what’s currently in the directory. However, if we do this:

OUTPUT

diff --git a/guacamole.md b/guacamole.md

index 315bf3a..b36abfd 100644

--- a/guacamole.md

+++ b/guacamole.md

@@ -1,6 +1,6 @@

# Guacamole

## Ingredients

* avocado

-* lemon

+* lime

* salt

## Instructionsit shows us the difference between the last committed change and what’s in the staging area. Let’s save our changes:

OUTPUT

[main 005937f] Modify guacamole to the traditional recipe

1 file changed, 1 insertion(+)check our status:

OUTPUT

On branch main

nothing to commit, working tree cleanand look at the history of what we’ve done so far:

OUTPUT

commit 005937fbe2a98fb83f0ade869025dc2636b4dad5 (HEAD -> main)

Author: Alfredo Linguini <a.linguini@ratatouille.fr>

Date: Thu Aug 22 10:14:07 2023 -0400

Modify guacamole to the traditional recipe

commit 34961b159c27df3b475cfe4415d94a6d1fcd064d

Author: Alfredo Linguini <a.linguini@ratatouille.fr>

Date: Thu Aug 22 10:07:21 2023 -0400

Add basic guacamole's ingredients

commit f22b25e3233b4645dabd0d81e651fe074bd8e73b

Author: Alfredo Linguini <a.linguini@ratatouille.fr>

Date: Thu Aug 22 09:51:46 2023 -0400

Create a template for recipeWord-based diffing

Sometimes, e.g. in the case of the text documents a line-wise diff is

too coarse. That is where the --color-words option of

git diff comes in very useful as it highlights the changed

words using colors.

Paging the Log

When the output of git log is too long to fit in your

screen, git uses a program to split it into pages of the

size of your screen. When this “pager” is called, you will notice that

the last line in your screen is a :, instead of your usual

prompt.

- To get out of the pager, press Q.

- To move to the next page, press Spacebar.

- To search for

some_wordin all pages, press / and typesome_word. Navigate through matches pressing N.

Limit Log Size

To avoid having git log cover your entire terminal

screen, you can limit the number of commits that Git lists by using

-N, where N is the number of commits that you

want to view. For example, if you only want information from the last

commit you can use:

OUTPUT

commit 005937fbe2a98fb83f0ade869025dc2636b4dad5 (HEAD -> main)

Author: Alfredo Linguini <a.linguini@ratatouille.fr>

Date: Thu Aug 22 10:14:07 2023 -0400

Modify guacamole to the traditional recipeYou can also reduce the quantity of information using the

--oneline option:

OUTPUT

005937f (HEAD -> main) Modify guacamole to the traditional recipe

34961b1 Add basic guacamole's ingredients

f22b25e Create a template for recipeYou can also combine the --oneline option with others.

One useful combination adds --graph to display the commit

history as a text-based graph and to indicate which commits are

associated with the current HEAD, the current branch

main, or other

Git references:

OUTPUT

* 005937f (HEAD -> main) Modify guacamole to the traditional recipe

* 34961b1 Add basic guacamole's ingredients

* f22b25e Create a template for recipeDirectories

Two important facts you should know about directories in Git.

- Git does not track directories on their own, only files within them. Try it for yourself:

Note, our newly created empty directory cakes does not

appear in the list of untracked files even if we explicitly add it

(via git add) to our repository. This is the

reason why you will sometimes see .gitkeep files in

otherwise empty directories. Unlike .gitignore, these files

are not special and their sole purpose is to populate a directory so

that Git adds it to the repository. In fact, you can name such files

anything you like.

- If you create a directory in your Git repository and populate it with files, you can add all files in the directory at once by:

Try it for yourself:

Before moving on, we will commit these changes.

To recap, when we want to add changes to our repository, we first

need to add the changed files to the staging area (git add)

and then commit the staged changes to the repository

(git commit):

Choosing a Commit Message

Which of the following commit messages would be most appropriate for

the last commit made to guacamole.md?

- “Changes”

- “Changed lemon for lime”

- “Guacamole modified to the traditional recipe”

Answer 1 is not descriptive enough, and the purpose of the commit is unclear; and answer 2 is redundant to using “git diff” to see what changed in this commit; but answer 3 is good: short, descriptive, and imperative.

Committing Changes to Git

Which command(s) below would save the changes of

myfile.txt to my local Git repository?

- Would only create a commit if files have already been staged.

- Would try to create a new repository.

- Is correct: first add the file to the staging area, then commit.

- Would try to commit a file “my recent changes” with the message myfile.txt.

Committing Multiple Files

The staging area can hold changes from any number of files that you want to commit as a single snapshot.

- Add some text to

guacamole.mdnoting the rough price of the ingredients. - Create a new file

groceries.mdwith a list of products and their prices for different markets. - Add changes from both files to the staging area, and commit those changes.

First we make our changes to the guacamole.md and

groceries.md files:

OUTPUT

# Guacamole

## Ingredients

* avocado (1.35)

* lime (0.64)

* salt (2)OUTPUT

# Market A

* avocado: 1.35 per unit.

* lime: 0.64 per unit

* salt: 2 per kgNow you can add both files to the staging area. We can do that in one line:

Or with multiple commands:

Now the files are ready to commit. You can check that using

git status. If you are ready to commit use:

OUTPUT

[main cc127c2]

Write prices for ingredients and their source

2 files changed, 7 insertions(+)

create mode 100644 groceries.mdKey Points

-

git statusshows the status of a repository. - Files can be stored in a project’s working directory (which users see), the staging area (where the next commit is being built up) and the local repository (where commits are permanently recorded).

-

git addputs files in the staging area. -

git commitsaves the staged content as a new commit in the local repository. - Write a commit message that accurately describes your changes.

Exploring History

As we saw in the previous episode, we can refer to commits by their

identifiers. You can refer to the most recent commit of the

working directory by using the identifier HEAD.

We’ve been adding small changes at a time to

guacamole.md, so it’s easy to track our progress by

looking, so let’s do that using our HEADs. Before we start,

let’s make a change to guacamole.md, adding yet another

line.

OUTPUT

# Guacamole

## Ingredients

* avocado

* lime

* salt

## Instructions

An ill-considered changeNow, let’s see what we get.

OUTPUT

diff --git a/guacamole.md b/guacamole.md

index b36abfd..0848c8d 100644

--- a/guacamole.md

+++ b/guacamole.md

@@ -4,3 +4,4 @@

* lime

* salt

## Instructions

+An ill-considered changewhich is the same as what you would get if you leave out

HEAD (try it). The real goodness in all this is when you

can refer to previous commits. We do that by adding ~1

(where “~” is “tilde”, pronounced [til-duh])

to refer to the commit one before HEAD.

If we want to see the differences between older commits we can use

git diff again, but with the notation HEAD~1,

HEAD~2, and so on, to refer to them:

OUTPUT

diff --git a/guacamole.md b/guacamole.md

index df0654a..b36abfd 100644

--- a/guacamole.md

+++ b/guacamole.md

@@ -1,3 +1,6 @@

# Guacamole

## Ingredients

+* avocado

+* lime

+* salt

## Instructions

+An ill-considered changeWe could also use git show which shows us what changes

we made at an older commit as well as the commit message, rather than

the differences between a commit and our working directory that

we see by using git diff.

OUTPUT

commit f22b25e3233b4645dabd0d81e651fe074bd8e73b

Author: Alfredo Linguini <a.linguini@ratatouille.fr>

Date: Thu Aug 22 10:07:21 2023 -0400

Create a template for recipe

diff --git a/guacamole.md b/guacamole.md

new file mode 100644

index 0000000..df0654a

--- /dev/null

+++ b/guacamole.md

@@ -0,0 +1,3 @@

+# Guacamole

+## Ingredients

+## InstructionsIn this way, we can build up a chain of commits. The most recent end

of the chain is referred to as HEAD; we can refer to

previous commits using the ~ notation, so

HEAD~1 means “the previous commit”, while

HEAD~123 goes back 123 commits from where we are now.

We can also refer to commits using those long strings of digits and

letters that both git log and git show

display. These are unique IDs for the changes, and “unique” really does

mean unique: every change to any set of files on any computer has a

unique 40-character identifier. Our first commit was given the ID

f22b25e3233b4645dabd0d81e651fe074bd8e73b, so let’s try

this:

OUTPUT

diff --git a/guacamole.md b/guacamole.md

index df0654a..93a3e13 100644

--- a/guacamole.md

+++ b/guacamole.md

@@ -1,3 +1,7 @@

# Guacamole

## Ingredients

+* avocado

+* lime

+* salt

## Instructions

+An ill-considered changeThat’s the right answer, but typing out random 40-character strings is annoying, so Git lets us use just the first few characters (typically seven for normal size projects):

OUTPUT

diff --git a/guacamole.md b/guacamole.md

index df0654a..93a3e13 100644

--- a/guacamole.md

+++ b/guacamole.md

@@ -1,3 +1,7 @@

# Guacamole

## Ingredients

+* avocado

+* lime

+* salt

## Instructions

+An ill-considered changeAll right! So we can save changes to files and see what we’ve

changed. Now, how can we restore older versions of things? Let’s suppose

we change our mind about the last update to guacamole.md

(the “ill-considered change”).

git status now tells us that the file has been changed,

but those changes haven’t been staged:

OUTPUT

On branch main

Changes not staged for commit:

(use "git add <file>..." to update what will be committed)

(use "git restore <file>..." to discard changes in working directory)

modified: guacamole.md

no changes added to commit (use "git add" and/or "git commit -a")We can put things back the way they were by using

git restore:

OUTPUT

# Guacamole

## Ingredients

* avocado

* lime

* salt

## InstructionsAs you might guess from its name, git restore restores

an old version of a file. By default, it recovers the version of the

file recorded in HEAD, which is the last saved commit. If

we want to go back even further, we can use a commit identifier instead,

using -s option:

OUTPUT

# Guacamole

## Ingredients

## InstructionsOUTPUT

On branch main

Changes not staged for commit:

(use "git add <file>..." to update what will be committed)

(use "git restore <file>..." to discard changes in working directory)

modified: guacamole.md

no changes added to commit (use "git add" and/or "git commit -a")

Notice that the changes are not currently in the staging area, and

have not been committed. If we wished, we can put things back the way

they were at the last commit by using git restore to

overwrite the working copy with the last committed version:

OUTPUT

# Guacamole

## Ingredients

* avocado

* lime

* salt

## InstructionsIt’s important to remember that we must use the commit number that

identifies the state of the repository before the change we’re

trying to undo. A common mistake is to use the number of the commit in

which we made the change we’re trying to discard. In the example below,

we want to retrieve the state from before the most recent commit

(HEAD~1), which is commit f22b25e. We use the

. to mean all files:

So, to put it all together, here’s how Git works in cartoon form:

The fact that files can be reverted one by one tends to change the way people organize their work. If everything is in one large document, it’s hard (but not impossible) to undo changes to the introduction without also undoing changes made later to the conclusion. If the introduction and conclusion are stored in separate files, on the other hand, moving backward and forward in time becomes much easier.

Recovering Older Versions of a File

Jennifer has made changes to the Python script that she has been working on for weeks, and the modifications she made this morning “broke” the script and it no longer runs. She has spent ~ 1hr trying to fix it, with no luck…

Luckily, she has been keeping track of her project’s versions using

Git! Which commands below will let her recover the last committed

version of her Python script called data_cruncher.py?

$ git restore$ git restore data_cruncher.py$ git restore -s HEAD~1 data_cruncher.py$ git restore -s <unique ID of last commit> data_cruncher.pyBoth 2 and 4

The answer is (5)-Both 2 and 4.

The restore command restores files from the repository,

overwriting the files in your working directory. Answers 2 and 4 both

restore the latest version in the repository of the

file data_cruncher.py. Answer 2 uses HEAD to

indicate the latest, whereas answer 4 uses the unique ID of the

last commit, which is what HEAD means.

Answer 3 gets the version of data_cruncher.py from the

commit before HEAD, which is NOT what we

wanted.

Answer 1 results in an error. You need to specify a file to restore.

If you want to restore all files you should use

git restore .

Reverting a Commit

Jennifer is collaborating with colleagues on her Python script. She

realizes her last commit to the project’s repository contained an error,

and wants to undo it. Jennifer wants to undo correctly so everyone in

the project’s repository gets the correct change. The command

git revert [erroneous commit ID] will create a new commit

that reverses the erroneous commit.

The command git revert is different from

git restore -s [commit ID] . because

git restore returns the files not yet committed within the

local repository to a previous state, whereas git revert

reverses changes committed to the local and project repositories.

Below are the right steps and explanations for Jennifer to use

git revert, what is the missing command?

________ # Look at the git history of the project to find the commit IDCopy the ID (the first few characters of the ID, e.g. 0b1d055).

git revert [commit ID]Type in the new commit message.

Save and close.

The command git log lists project history with commit

IDs.

The command git show HEAD shows changes made at the

latest commit, and lists the commit ID; however, Jennifer should

double-check it is the correct commit, and no one else has committed

changes to the repository.

Understanding Workflow and History

What is the output of the last command in

BASH

$ cd recipes

$ echo "I like tomatoes, therefore I like ketchup" > ketchup.md

$ git add ketchup.md

$ echo "ketchup enhances pasta dishes" >> ketchup.md

$ git commit -m "My opinions about the red sauce"

$ git restore ketchup.md

$ cat ketchup.md # this will print the content of ketchup.md on screenOUTPUT

ketchup enhances pasta dishesOUTPUT

I like tomatoes, therefore I like ketchupOUTPUT

I like tomatoes, therefore I like ketchup ketchup enhances pasta dishesOUTPUT

Error because you have changed ketchup.md without committing the changes

The answer is 2.

The changes to the file from the second echo command are

only applied to the working copy, The command

git add ketchup.md places the current version of

ketchup.md into the staging area. not the version in the

staging area.

So, when git commit -m "My opinions about the red sauce"

is executed, the version of ketchup.md committed to the

repository is the one from the staging area and has only one line.

At this time, the working copy still has the second line (and

git status will show that the file is modified). However,

git restore ketchup.md replaces the working copy with the

most recently committed version of ketchup.md. So,

cat ketchup.md will output

OUTPUT

I like tomatoes, therefore I like ketchupChecking Understanding of

git diff

Consider this command: git diff HEAD~9 guacamole.md.

What do you predict this command will do if you execute it? What happens

when you do execute it? Why?

Try another command, git diff [ID] guacamole.md, where

[ID] is replaced with the unique identifier for your most recent commit.

What do you think will happen, and what does happen?

Getting Rid of Staged Changes

git restore can be used to restore a previous commit

when unstaged changes have been made, but will it also work for changes

that have been staged but not committed? Make a change to

guacamole.md, add that change using git add,

then use git restore to see if you can remove your

change.

After adding a change, git restore can not be used

directly. Let’s look at the output of git status:

OUTPUT

On branch main

Changes to be committed:

(use "git restore --staged <file>..." to unstage)

modified: guacamole.md

Note that if you don’t have the same output you may either have forgotten to change the file, or you have added it and committed it.

Using the command git restore guacamole.md now does not

give an error, but it does not restore the file either. Git helpfully

tells us that we need to use git restore --staged first to

unstage the file:

Now, git status gives us:

OUTPUT

On branch main

Changes not staged for commit:

(use "git add <file>..." to update what will be committed)

(use "git git restore <file>..." to discard changes in working directory)

modified: guacamole.md

no changes added to commit (use "git add" and/or "git commit -a")This means we can now use git restore to restore the

file to the previous commit:

OUTPUT

On branch main

nothing to commit, working tree cleanKey Points

-

git diffdisplays differences between commits. -

git restorerecovers old versions of files.

Ignoring Things

What if we have files that we do not want Git to track for us, like backup files created by our editor or intermediate files created during data analysis? Let’s create a few dummy files:

and see what Git says:

OUTPUT

On branch main

Untracked files:

(use "git add <file>..." to include in what will be committed)

a.png

b.png

c.png

receipts/

nothing added to commit but untracked files present (use "git add" to track)Putting these files under version control would be a waste of disk space. What’s worse, having them all listed could distract us from changes that actually matter, so let’s tell Git to ignore them.

We do this by creating a file in the root directory of our project

called .gitignore:

OUTPUT

*.png

receipts/These patterns tell Git to ignore any file whose name ends in

.png and everything in the receipts directory.

(If any of these files were already being tracked, Git would continue to

track them.)

Once we have created this file, the output of git status

is much cleaner:

OUTPUT

On branch main

Untracked files:

(use "git add <file>..." to include in what will be committed)

.gitignore

nothing added to commit but untracked files present (use "git add" to track)The only thing Git notices now is the newly-created

.gitignore file. You might think we wouldn’t want to track

it, but everyone we’re sharing our repository with will probably want to

ignore the same things that we’re ignoring. Let’s add and commit

.gitignore:

OUTPUT

On branch main

nothing to commit, working tree cleanAs a bonus, using .gitignore helps us avoid accidentally

adding files to the repository that we don’t want to track:

OUTPUT

The following paths are ignored by one of your .gitignore files:

a.png

Use -f if you really want to add them.If we really want to override our ignore settings, we can use

git add -f to force Git to add something. For example,

git add -f a.csv. We can also always see the status of

ignored files if we want:

OUTPUT

On branch main

Ignored files:

(use "git add -f <file>..." to include in what will be committed)

a.png

b.png

c.png

receipts/

nothing to commit, working tree cleanIf you only want to ignore the contents of

receipts/plots, you can change your .gitignore

to ignore only the /plots/ subfolder by adding the

following line to your .gitignore:

OUTPUT

receipts/plots/This line will ensure only the contents of

receipts/plots is ignored, and not the contents of

receipts/data.

Including Specific Files

How would you ignore all .png files in your root

directory except for final.png? Hint: Find out what

! (the exclamation point operator) does

You would add the following two lines to your .gitignore:

OUTPUT

*.png # ignore all png files

!final.png # except final.pngThe exclamation point operator will include a previously excluded entry.

Note also that because you’ve previously committed .png

files in this lesson they will not be ignored with this new rule. Only

future additions of .png files added to the root directory

will be ignored.

Ignoring all data Files in a Directory

Assuming you have an empty .gitignore file, and given a directory structure that looks like:

BASH

receipts/data/market_position/gps/a.dat

receipts/data/market_position/gps/b.dat

receipts/data/market_position/gps/c.dat

receipts/data/market_position/gps/info.txt

receipts/plotsWhat’s the shortest .gitignore rule you could write to

ignore all .dat files in

receipts/data/market_position/gps? Do not ignore the

info.txt.

Appending receipts/data/market_position/gps/*.dat will

match every file in receipts/data/market_position/gps that

ends with .dat. The file

receipts/data/market_position/gps/info.txt will not be

ignored.

Ignoring all data Files in the repository

Let us assume you have many .csv files in different

subdirectories of your repository. For example, you might have:

BASH

results/a.csv

data/experiment_1/b.csv

data/experiment_2/c.csv

data/experiment_2/variation_1/d.csvHow do you ignore all the .csv files, without explicitly

listing the names of the corresponding folders?

In the .gitignore file, write:

OUTPUT

**/*.csvThis will ignore all the .csv files, regardless of their

position in the directory tree. You can still include some specific

exception with the exclamation point operator.

Practice using graphical Git tools - ignore PyCharm internal files

Open your recipes repo in PyCharm.

- does PyCharm create any additional files/directories in your repo?

- configure Git to ignore this additional PyCharm content

- PyCharm creates a

.idea/sub-directory in your repo directory - To exclude it from Git, you can add this line to your

.gitignore:

OUTPUT

.idea/Key Points

- The .gitignore file is a text file that tells Git which files to track and which to ignore in the repository.

- You can list specific files or folders to be ignored by Git, or you can include files that would normally be ignored.

Practice using graphical Git tools

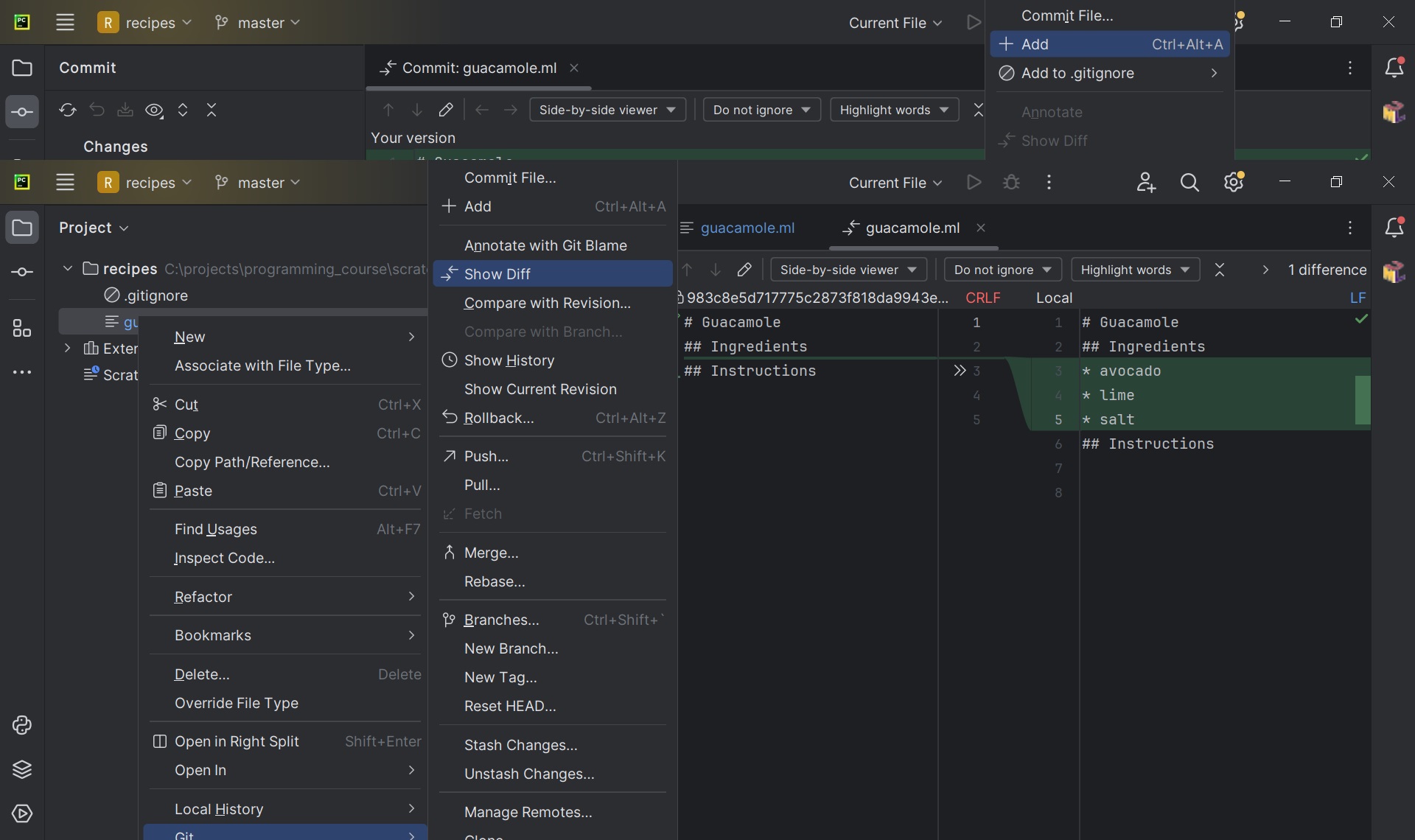

Using the graphical Git tools in PyCharm repeat the basic Git commands explained so far:

- In the “recipes” repo, modify the

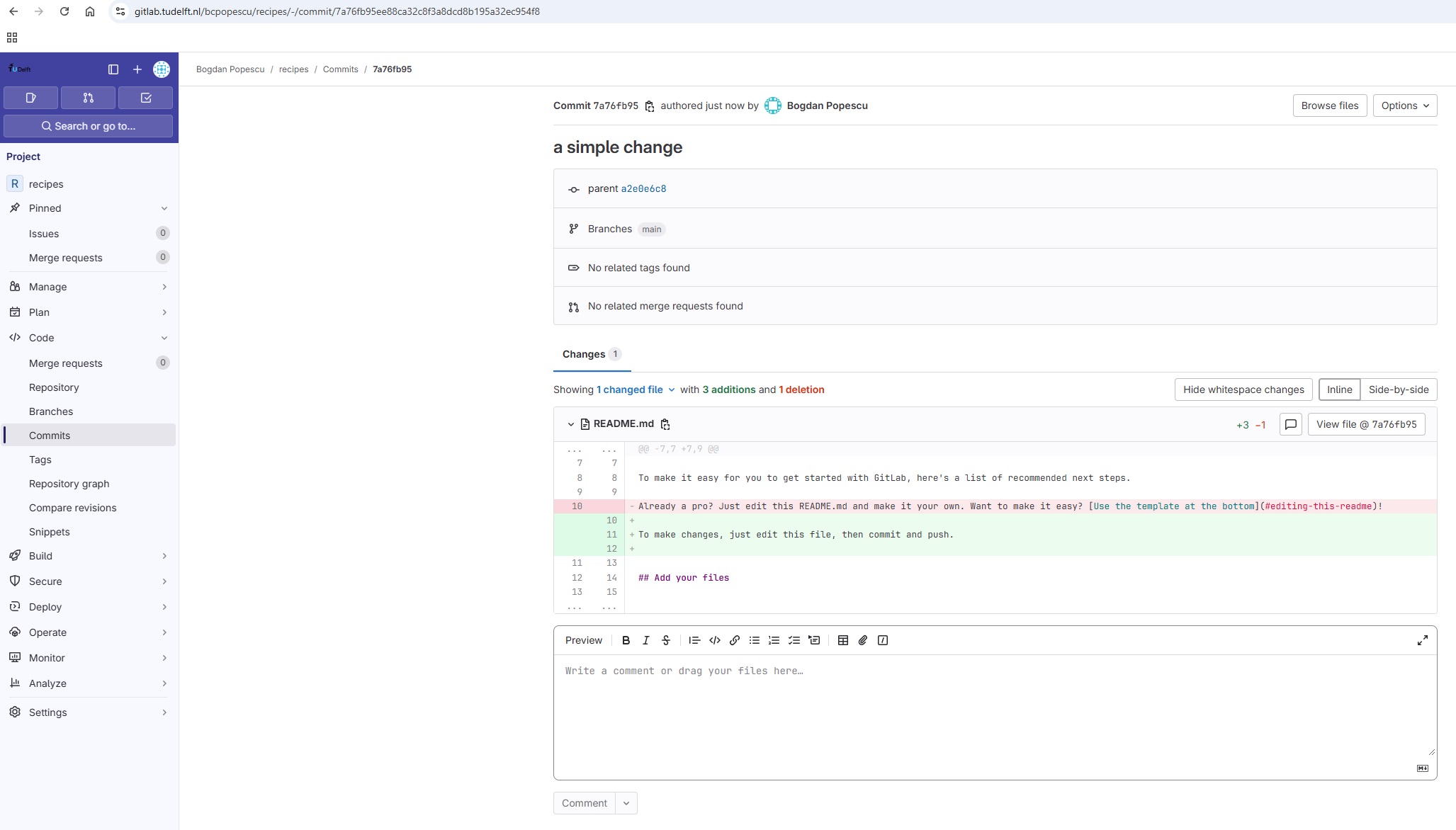

guacamole.mdrecipe. - Visually inspect what has been changed in the file.

- Commit the file, and visually inspect the Git commit log.

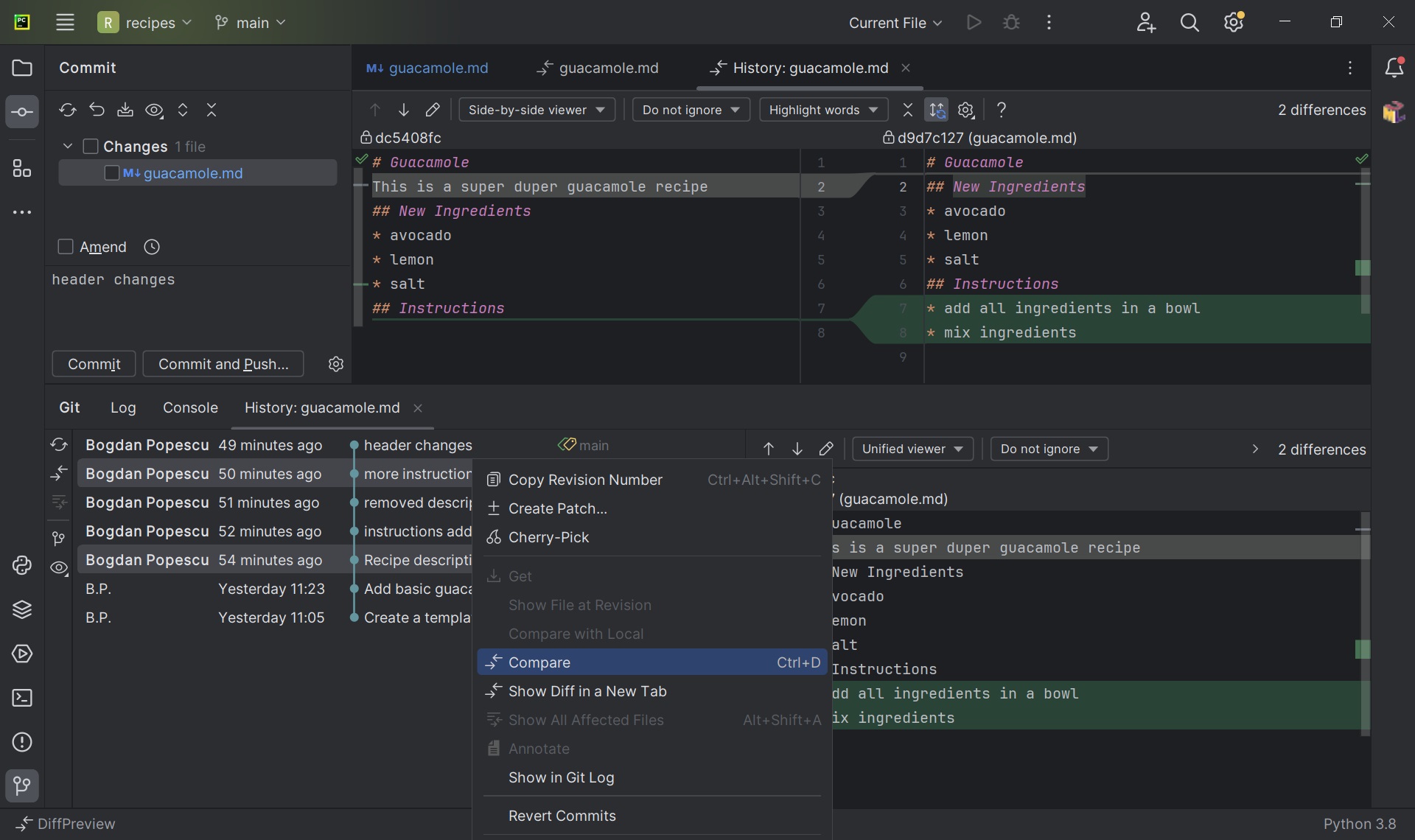

Visually diff-ing in PyCharm:

Visually inspecting commit logs:

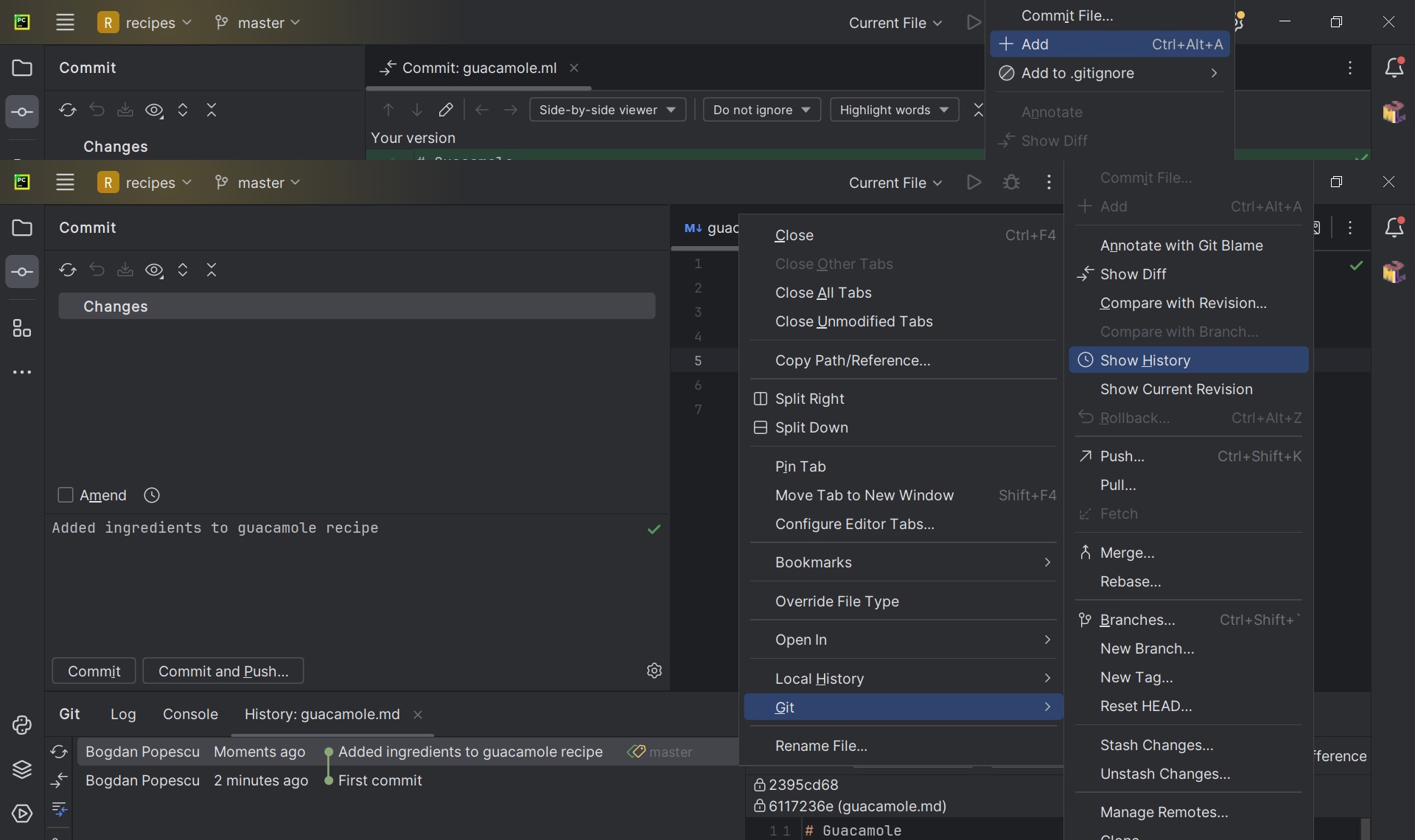

Practice using graphical Git tools

Using the graphical Git tools in PyCharm repeat the basic Git commands explained so far:

- Revert un-staged changes in the

guacamole.mdrecipe. - Revert the

guacamole.mdrecipe to an earlier commit -HEAD~2. - Examine changes between

HEAD~1andHEAD~5.

- Discarding un-staged changes with PyCharm:

- “Revert the

guacamole.mdrecipe to an earlier commit -HEAD~2”:

This was actually a tricky question! PyCharm does not provide a direct GUI option to revert a file to a specific Git revision. This is one example of GUI tools limitations, which shows the importance of understanding and mastering Git from the command line.

- Comparing two Git revisions:

Content from Intermezzo I

Last updated on 2025-07-07 | Edit this page

The focus of the first workshop of this course has been version

control, and an introduction to Git. Some very important Git workflows

have been covered, such as the “modify-add-commit” cycle. However, all

the Git topics covered then were focusing on creating and using a

local repository, stored on the user’s workstation, and

with only one participant - the user herself.

In this second workshop we will take you to the next level in terms of Git functionality - and show you how you can fully harness the power of version control by using Git in a collaborative environment where multiple users can work together on the same project all coordinated through Git workflows. Once we master such advanced Git workflows, we will pivot towards the programming part of this course by looking at Python virtual environments.

To summarize, this is how this second workshop will be organized:

- GitLab - an on-line Git platform

- Short break

- Advanced Git Commands - facilitated by on-line Git platforms such as GitLab

- Lunch break

- Python Virtual Environments

Required Software

In addition to the software we were using in the previous workshop, for today you will also need the following:

- Python (3.12 recommended)

- Access to the TUD GitLab

Content from GitLab

Last updated on 2025-07-08 | Edit this page

Overview

Questions

- What is GitLab?

- How can I find my way around GitLab?

- How can I create, modify and archive a GitLab project?

- How can multiple people collaborate on a project?

Objectives

- Use GitLab’s interface to find a projects/groups.

- Create and customize a project.

- Archive (or delete) a project.

- Connecting a GitLab project with a Git repository.

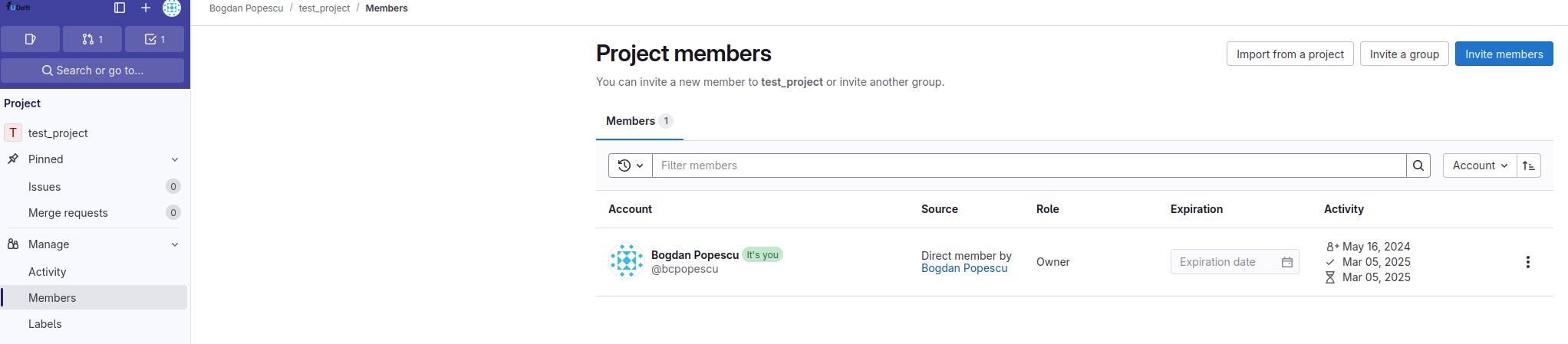

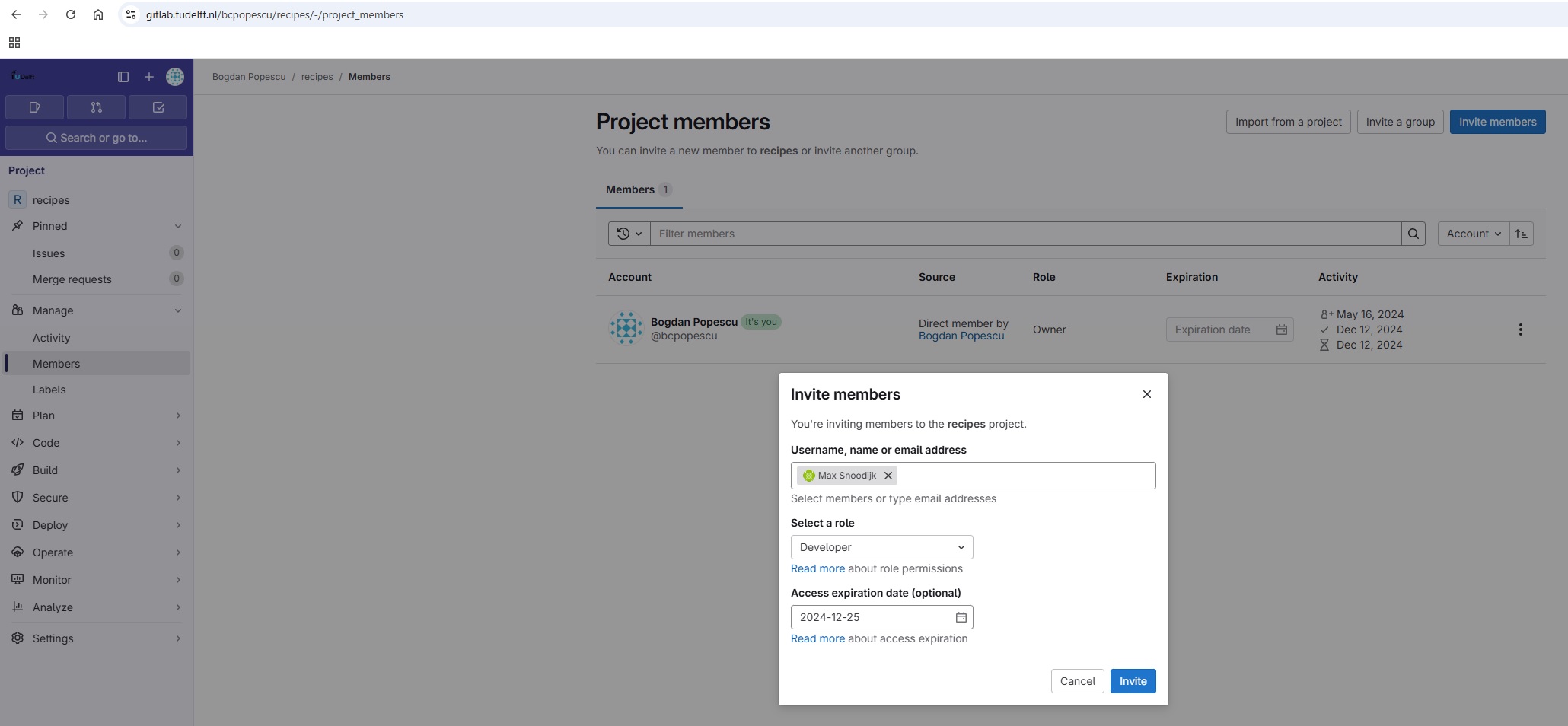

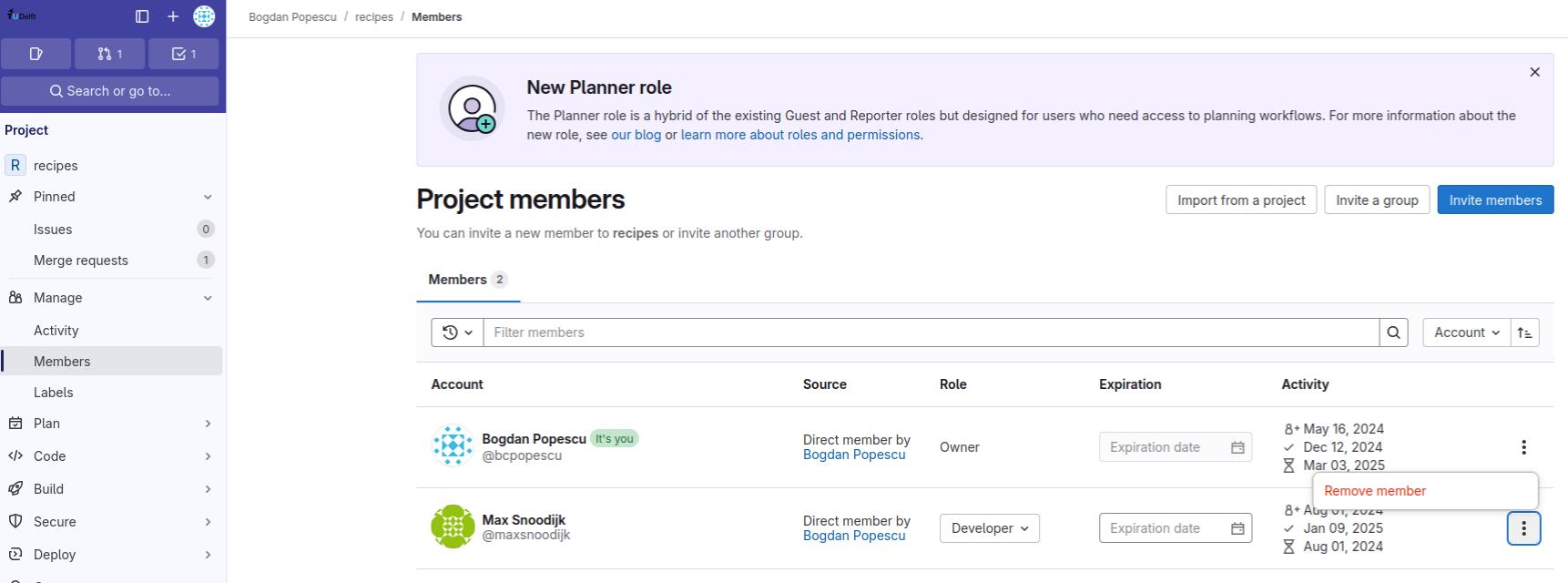

- Explain the concepts of members and roles and adding members to a GitLab project.

- Contribute to a project that you are a member of.

Introduction

GitLab is a web application for managing Git repositories. Since it is build around Git, it is suitable to manage any project that works primarily with plain text files, for example software source code, TeX based documents, or meeting notes in Markdown. With its built-in issue and wiki systems, it can, in certain cases, even be the right tool for managing a project without any files.

This episode will give you a foundational understanding of GitLab’s features, so you can make informed decisions on how to use it as a tool.

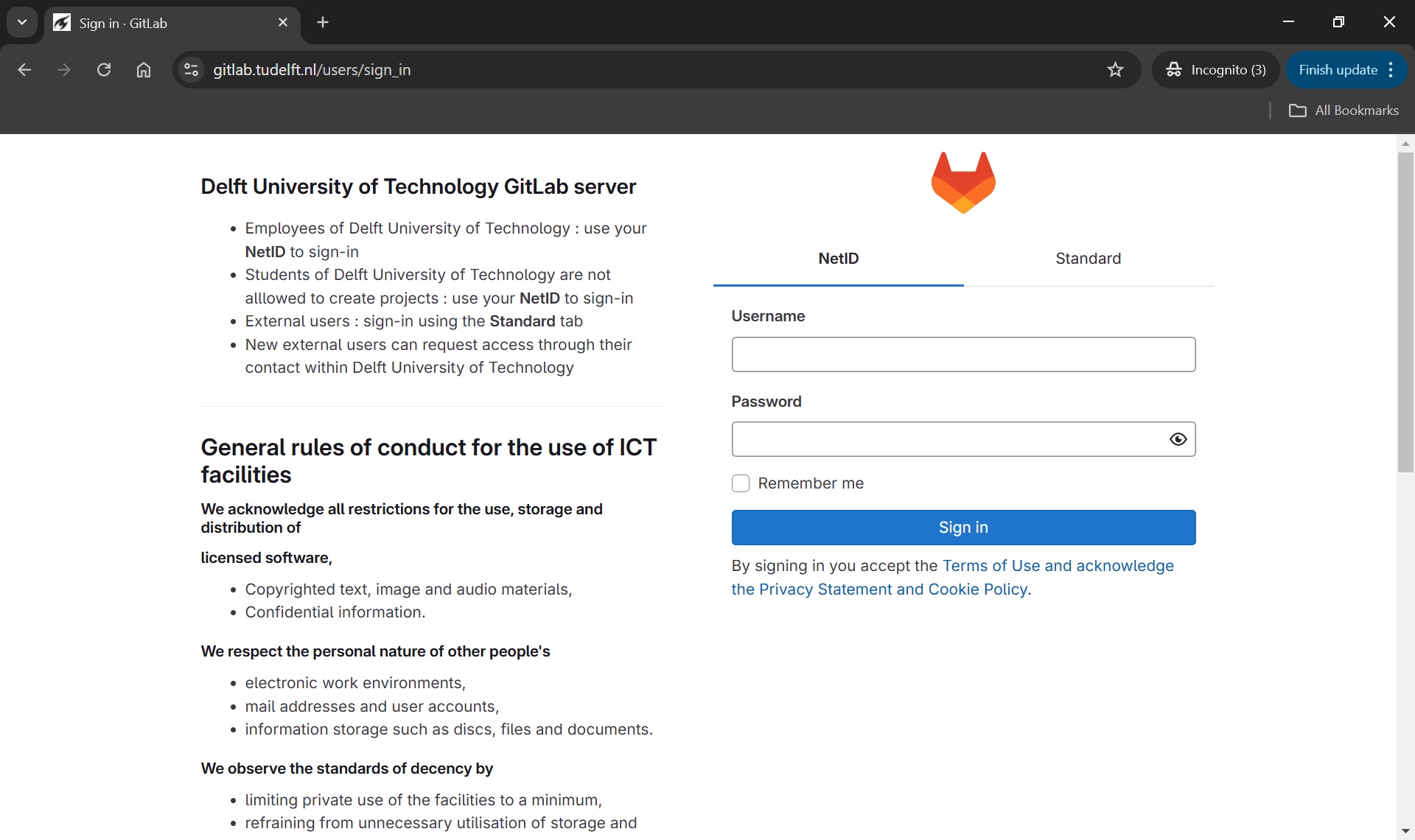

TU Delft is running their own self-hosted GitLab instance. As a QuTech employee, you are entitled to use this TUD GitLab instance; you can login to it with your NetID:

Navigation

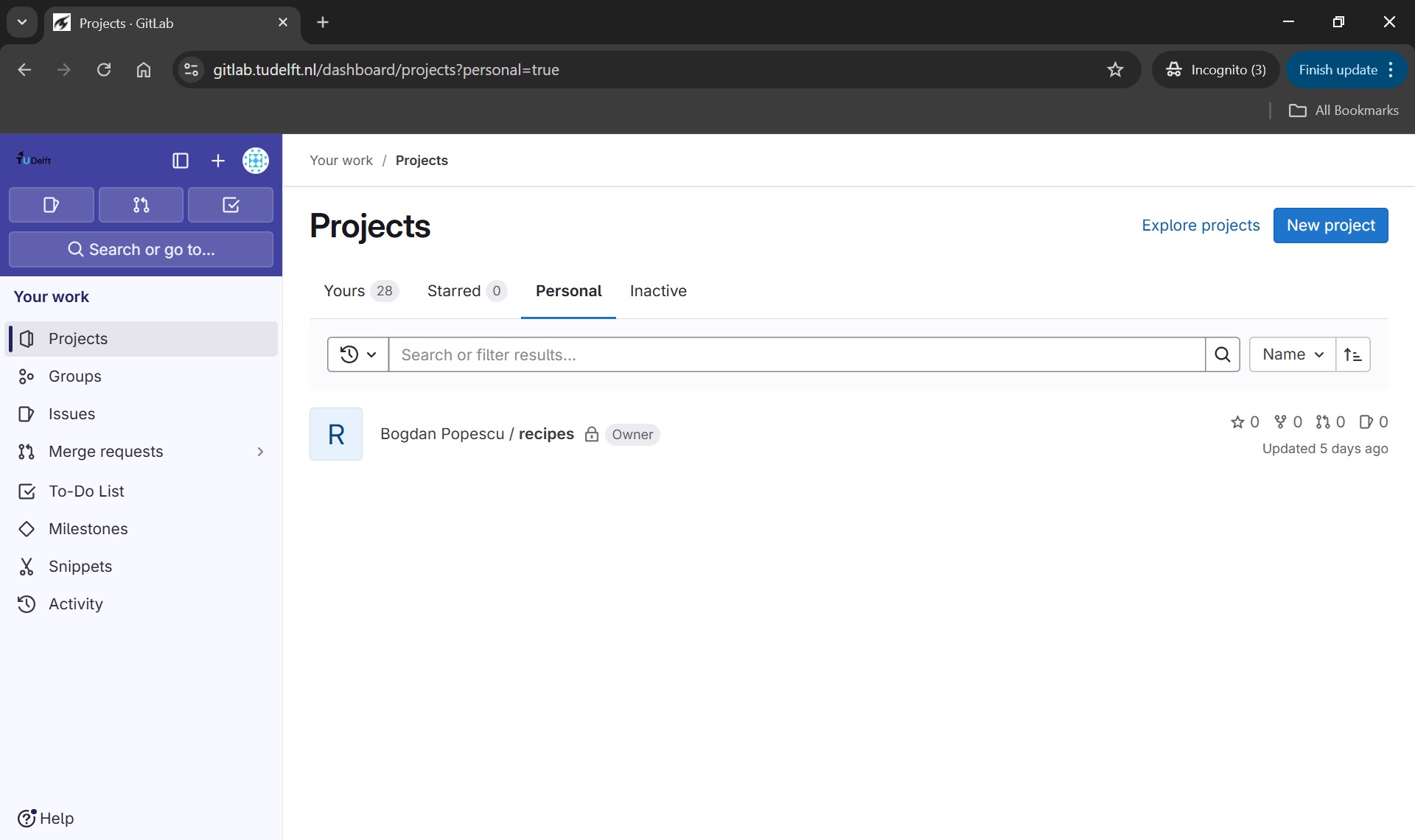

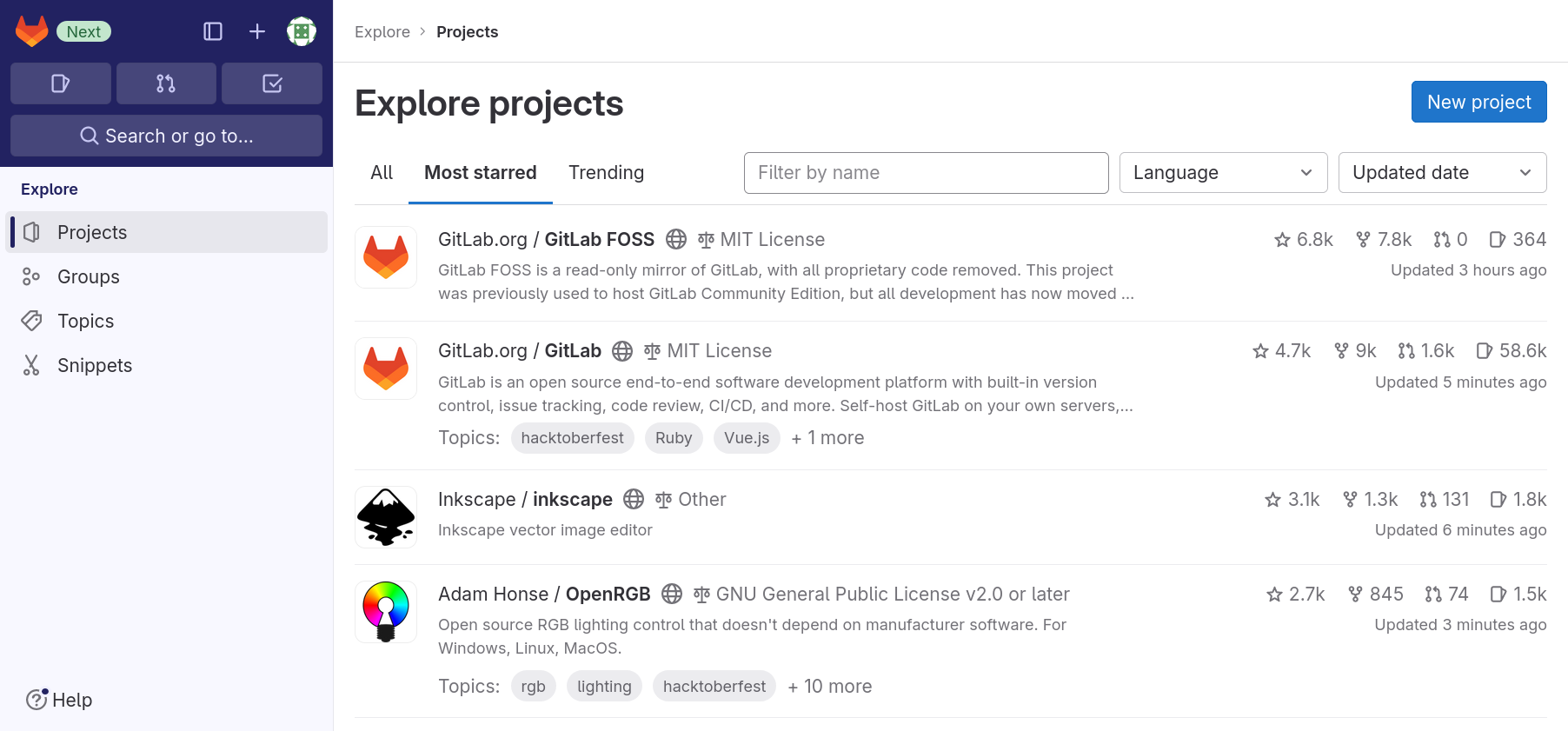

When we log into GitLab for the first time, we are greeted by a welcome page:

GitLab Projects

The “project” is essentially GitLab’s “collaboration unit”. A GitLab project is the central hub where everything related to a specific piece of work (code, discussions, automation, documentation, and tracking) comes together. GitLab projects can be “public” - visible to everyone who can access a given GitLab platform, or “private” - only visible to you (the project owner) and to people you have specifically invited to collaborate on that project. Each GitLab project is associated with a Git repo, which is stored internally by the GitLab platform.

You can browse/explore projects accessible from your GitLab portal, by following the “Explore projects” link:

The first tab, “All”, lists all projects that are visible to you in most recently updated order.

The second tab, “Most starred projects”, also lists all projects, but this time ordered most starred first. Users of a GitLab instance can “star” a project, which is the equivalent of bookmarking them, as we will see shortly.

The third tab, “Trending”, lists all projects again, but this time ordered by activity during the previous months.

You can use the filter field next to the tab names to search through all projects that are visible to you.

Finally, by following the “Groups” link on the left of your portal, you can see the GitLab project groups you have access to.

GitLab Groups

A GitLab ‘group’ is a collection of related projects that share the same members, permissions, and settings. Such groups can be used to organize and manage projects related to the same organization, such as a faculty, department, or research group. As an analogy, you can think of GitLab projects as individual files, while the GitLab groups correspond to the folders/directories containing these files. In this course we will not deal directly with such groups, but you should be aware they exist - as your research team may make use of them to organize their projects.

With the basic GitLab concepts now covered, in the next section we will explain how you can create new GitLab projects and link them to the Git concepts we have covered in the previous episodes.

GitLab Projects

Creating a Project

After knowing how to find groups and projects, we want to go from passively browsing GitLab to actively using it. We are going to create a project and connect a local Git repository to it.

To create a project, we click on the “New project” button on the upper-right corner of the right tab.

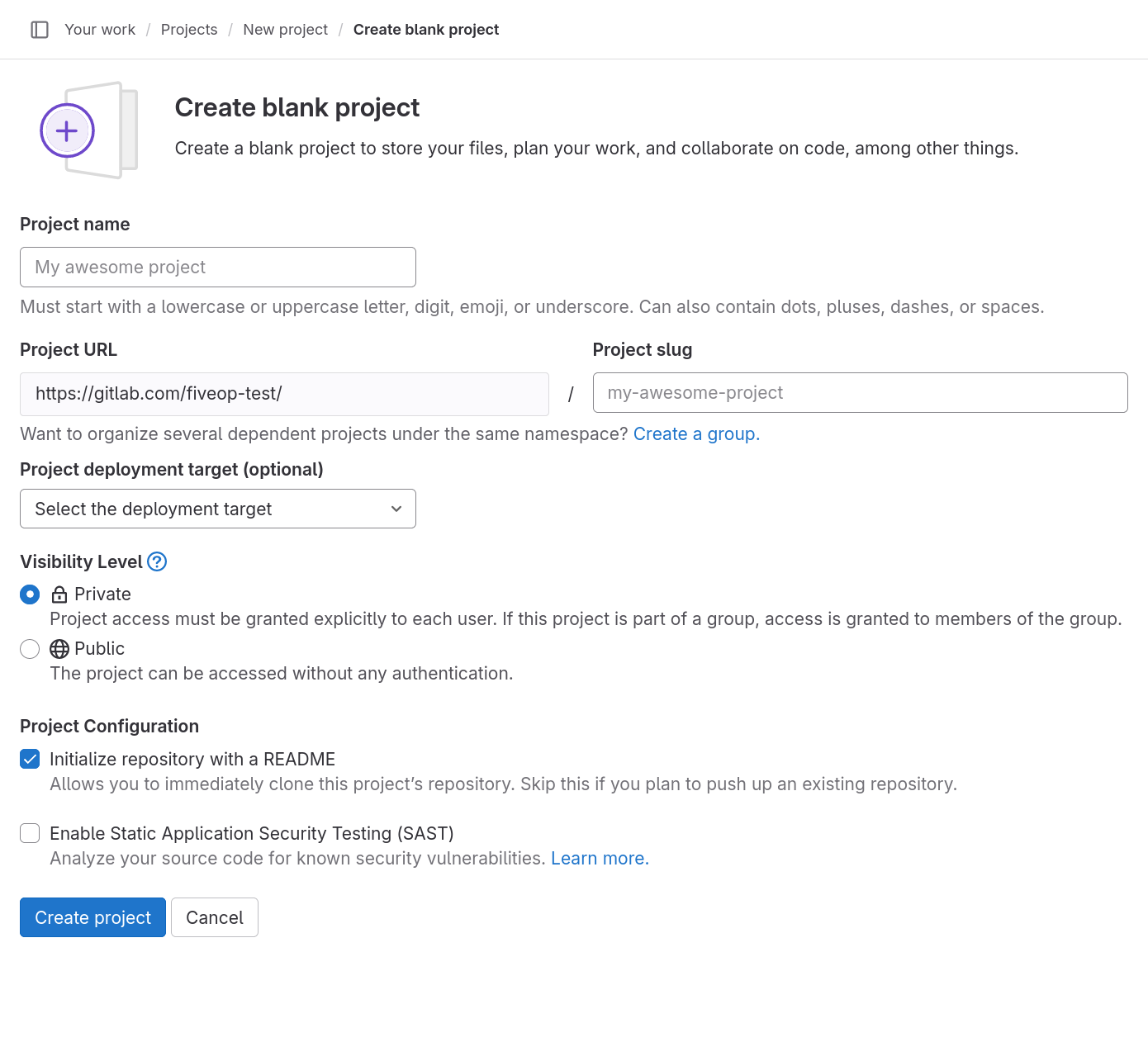

Multiple options are presented for how to create the new project. In this lesson we will only look at, and use, the first option: “Create blank project”. So click on that.

This leads to the following page:

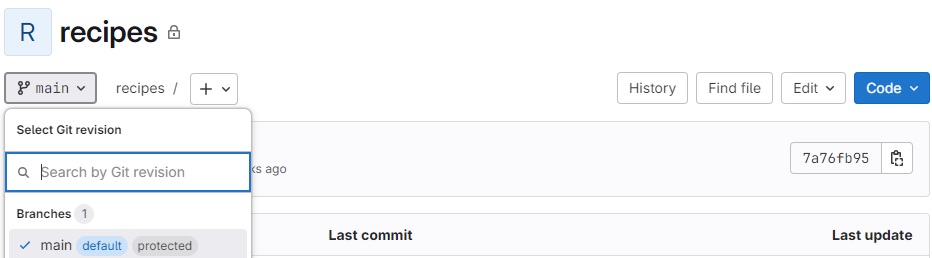

The “Project name” field is for just that, the project name. Its value has no other purpose and can be changed at anytime without indirect consequences (the direct consequence being, that its name will be different). Following the example in the previous episodes, we will call it “recipes”.

As we fill in the project name, a project slug gets suggested in the respective field. The project slug is the last part of the project’s, and the associated Git repository’s, URL or web address.

If the user belongs to at least one group, the URL’s middle part can be chosen in the drop-down field labeled “Project URL”, which defaults to the current user’s username. The default, indicated by the user’s name, is the location for a user’s projects (and groups). Other options in the drop-down list are the groups in which the current user may create projects.

Project URL and Project Slug

The two fields under labels “Project URL” and “Project slug” are the only fields in this form for which changing the value later might cause problems. They determine the URL under which the project’s pages and the project’s Git repository can be found, so changing them later might brake links and bookmarks as well as connections from Git repositories on other systems, for example on contributors’ machines.

We ignore the field labeled “Project deployment target (optional)”.

The choice under label “Visibility Level” determines the project’s visibility.

Visiblity

GitLab offers three settings for the visibility of a project: public, internal, and private. Publicly visible projects can be looked at by anyone that can access the GitLab instance, projects with internal visibility can be looked at by anyone logged in to the instance, while projects with private visibility can only be looked at by its members.

On GitLab.com the visibility “internal” is disabled. Everyone can create an account, log in to the instance, and thus could look at any project of internal visibility anyway.

Self-hosted instances might also disable some visibility types. For example, the public visibility might be disabled, to prevent users from publishing something to the whole internet.

We choose “Private” for our project’s visibility.

If, as per default, the checkbox “Initialize repository with a

README” is checked, the project’s repository will be initialized with a

commit that adds a file called README.md. Otherwise, a the

project will start with an empty repository. We will add such a file

later ourselves, so we uncheck the box.

README

A project’s README file usually contains basic information about the project: what it contains, how it can be used (for example built or installed, if it is a software project), how to contribute, how to get help, and licensing information.

It is common to write README files in Markdown format, indicated by

the filename suffix .md.

Platforms like GitLab show the contents of a project’s README file on its homepage; if it is in Markdown format, in its rendered form.

We will ignore any other fields that may be visible depending on the GitLab instances configuration.

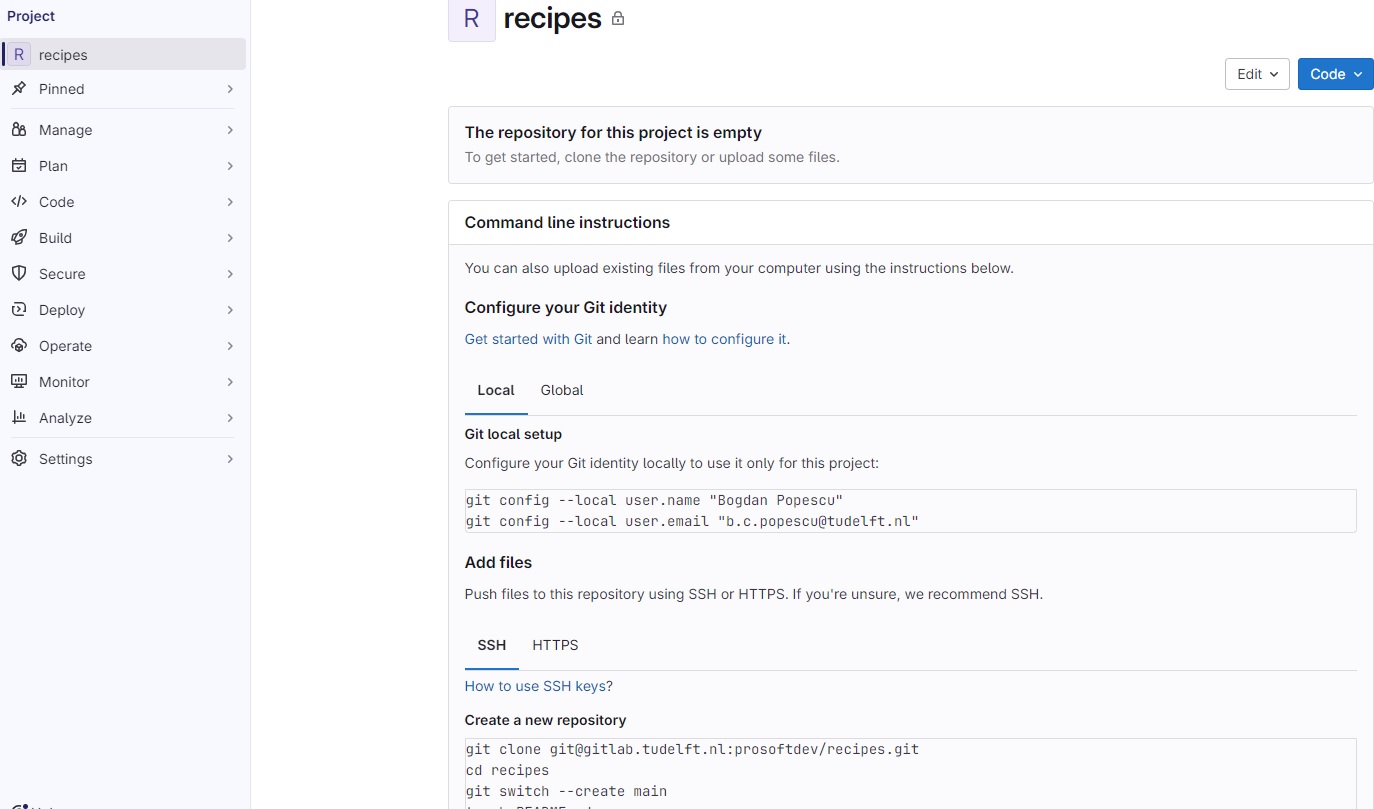

After clicking the “Create project” button, GitLab creates the project and redirects us to the project’s homepage, which looks similar to this:

The page is split into the menu on the left and the project overview on the right.

The menu leads to pages related to various properties, processes, and content of the project. It is visible on each of these pages. The number of menu entries may seem overwhelming, in particular when one notices that the entries have sub-entries. However, it is not necessary to know what hides behind all these entries to use GitLab. Following this lesson, we will get to know parts of what lies behind the entries “Manage”, “Code”, and “Settings”.

The project overview shows (from the top): The project’s avatar (or icon) and name; a prompt to invite team members (we will cover members later on); a statement that our repository is currently empty with buttons for several options to add content to it; and finally the beginning of the instructions on how to push a local repository to this project’s repository to fill it with content. We will follow them in just a bit.

The project overview page will look slightly different, once we have content in its repository. We will have another look at the page then.

Change Name, Description, Visibility, or Avatar

Click on the “Settings” sub-menu and select its “General” entry. Set the description to something meaningful, such as “A collection of simple recipes”.

The project description appears in many lists and on some pages under the project’s name.

Then change any of the project’s name, visibility, or avatar. Do not forget to click the “Save changes” button once you are done.

This exercise should take about 5 minutes.

Markdown

Markdown is a markup language like HTML, on which the World Wide Web is based, or wikitext, which is used to write Wikipedia’s content. Its markup directives, indicating for example that something is a headline or a list item, are such that they serve their purpose even in the plain text form.

The project overview page presents us with many options to add content to the project’s repository directly in GitLab. We will add our first file to the project repository in the next section.

Archiving a Project

We just went through the beginning of a GitLab project’s life cycle. At its end, if it has one, a project gets archived (or deleted). We will now go through the process of archiving a project, without completing the procedure.

Using the menu on the left, we navigate to the project’s “General” settings.

At the bottom of the page, we find a section named “Advanced”. We click on the “Expand” button right next to it and scroll down the page.

Notice that some of buttons here are not the usual blue or white, but rather red. This indicates that we should be careful about clicking them. Things might break or get deleted.

Scrolling back up, we find a section labeled “Archive Project”. Clicking the button will not delete the project. Instead it will be placed in a read-only mode. Everything will be preserved but nothing can be changed anymore. In addition, the project no longer shows up in search results and on the page for exploring projects.

Most of the time archiving a project is preferable to deleting it, in particular when it comes to research projects. Do not archive the project now! We will work with it throughout this lesson.

At the bottom of the page is also a section for deleting a project, in case you ever think that is the right thing to do.

Connecting GitLab Projects with Local Git Repositories

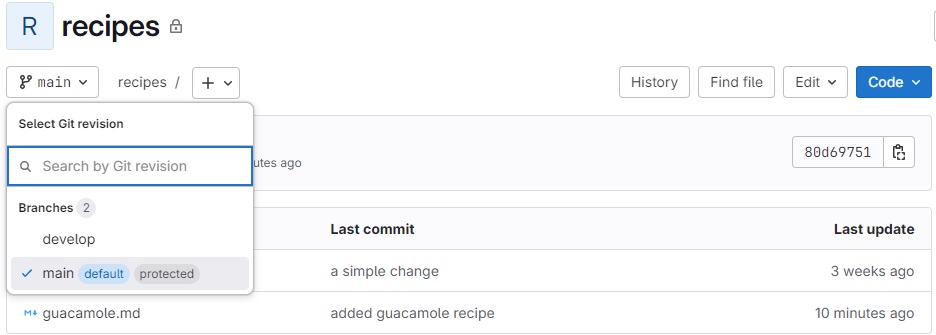

Remember from an earlier lesson that we have created a local Git repository that looked like this:

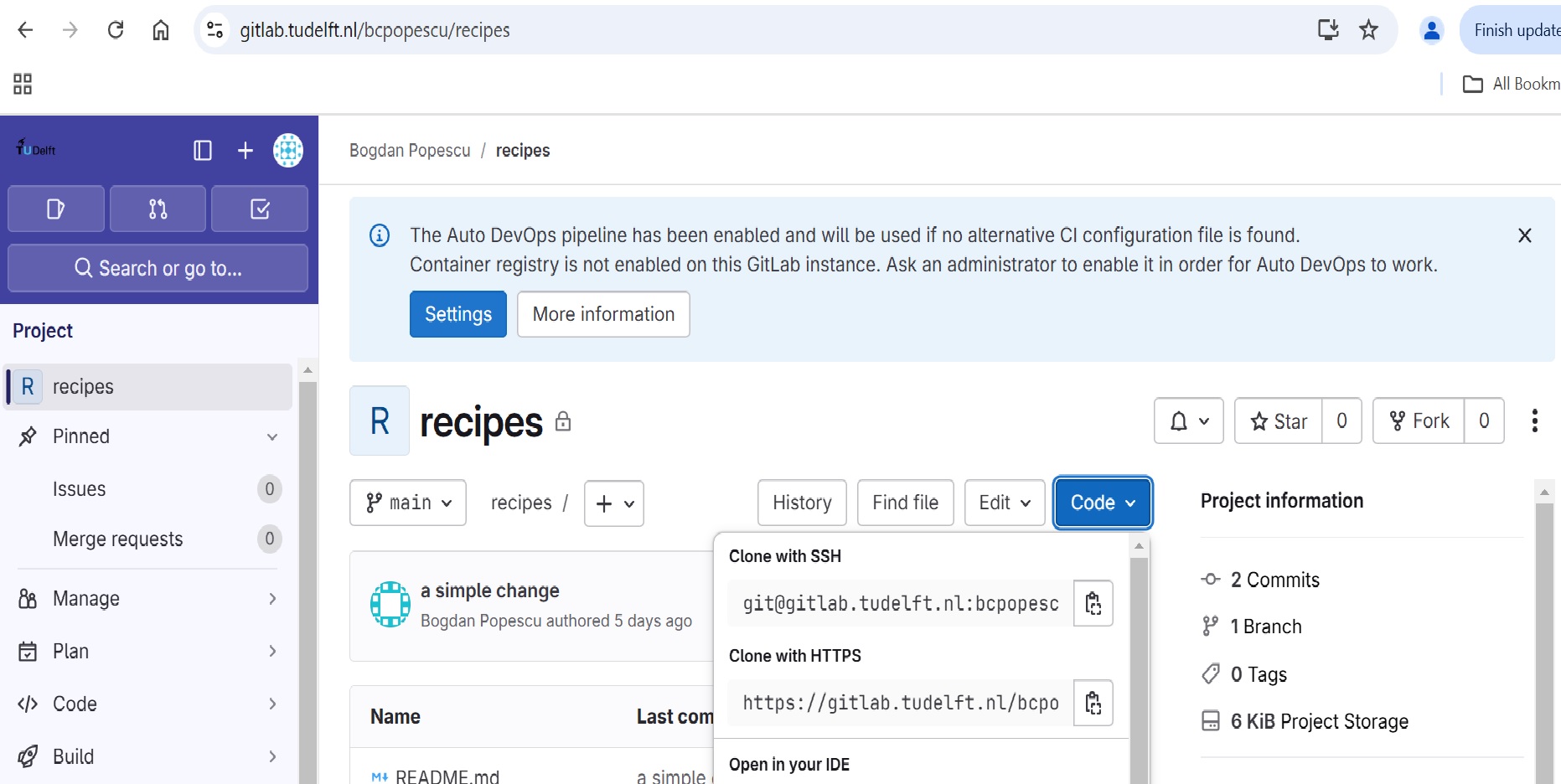

Now we want to have a GitLab project that will be coupled with this local Git repo. The first step here is to follow the steps described in the previous section, and create a new GitLab project called ‘recipes’. Initially the Git repo for this GitLab project will be empty, as shown in the diagram below:

Connecting the local to remote repository

Now we connect the two repositories. We do this by making the GitLab

repository a remote for the local

repository. Go to the home page of the repository on GitLab, click on

the blue Code button, and copy the string below the

Clone with SSH

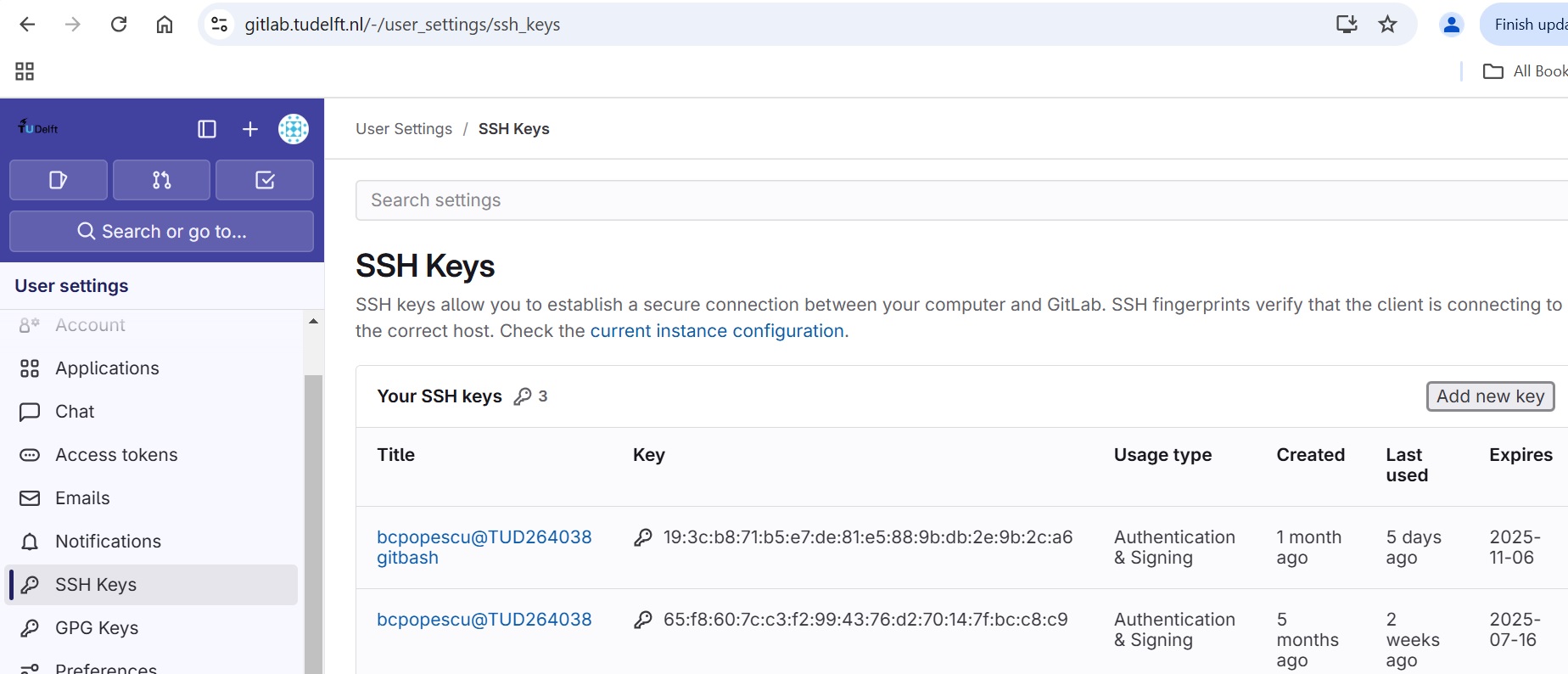

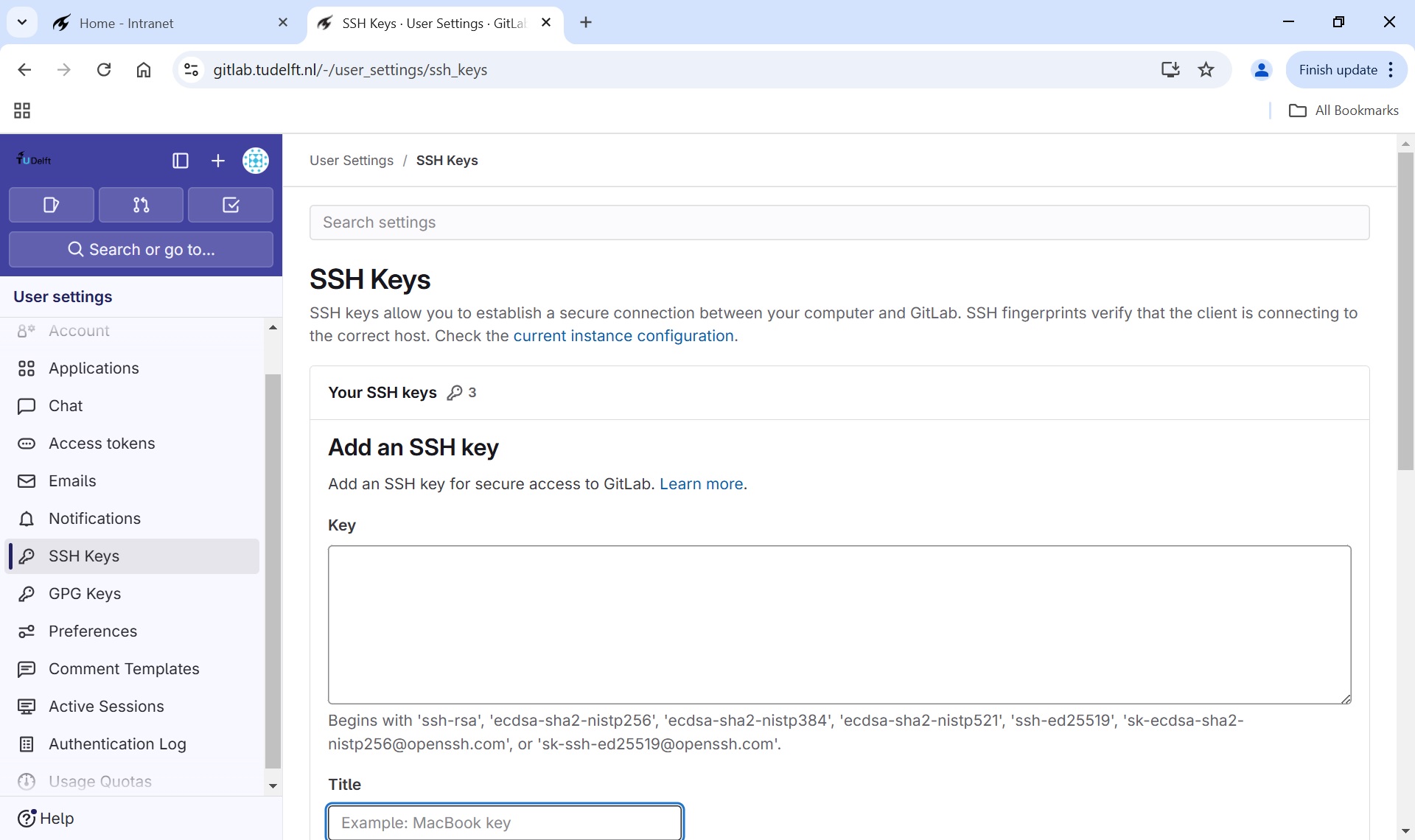

HTTPS vs. SSH

We use SSH here because, while it requires some additional configuration, it is a security protocol widely used by many applications. The steps below describe SSH at a minimum level for GitLab.

With the Clone with SSH string you copied from the

GitLab page, go into the local recipes repository, and run

this command:

Make sure to use the URL for your repository rather than Alfredo’s:

the only difference should be your username instead of

alflin.

origin is a local name used to refer to the remote

repository. It could be called anything, but origin is a